Rails ships with a default configuration for the three most common environments that all applications need: test, development, and production. That’s a great start, and for smaller apps, probably enough too. But for Basecamp, we have another three:

- Beta: For testing feature branches on real production data. We often run feature branches on one of our five beta servers for weeks at a time to evaluate them while placing the finishing touches. By running the beta environment against the production database, we get to use the feature as part of our own daily use of Basecamp. That’s the only reliable way to determine if something is genuinely useful in the long term, not just cool in the short term.

- Staging: This environment runs virtually identical to production, but on a backup of the production database. This is where we test features that require database migrations and time those migrations against real data sizes, so we know how to roll them out once ready to go.

- Rollout: When a feature is ready to go live, we first launch it to 10% of all Basecamp accounts in the rollout environment. This catches any issues with production data from accounts other than our own without subjecting the whole customer base to a bug.

These environments all get a file in config/environments/ and they’re all based off the production defaults.

So we have something like this for config/environments/beta.rb:

    # Based on production defaults
    require Rails.root.join("config/environments/production")

    beta_host_name = `hostname -s`.chomp[-1]

    BCX::Application.configure do
      # Beta namespace is different, but uses the same servers
      config.cache_store = :mem_cache_store, PRODUCTION_MEM_CACHE_SERVERS,
        { timeout: 1, namespace: "bcx-beta#{beta_host_name}" }

      # Each beta server gets its own asset environment
      config.action_controller.asset_host = config.action_mailer.asset_host =
        "https://b#{beta_host_name}-assets.basecamp.com"
    end

This gives each beta server its own memcache namespace and asset compilation, so we can run different feature branches concurrently without having them trample over each other.
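To make the per-server naming concrete, here is a small worked example of what those interpolations produce. The hostname `bcx-beta-3` is an assumption made up for illustration; the post does not say how the beta servers are actually named.

```ruby
# Hypothetical short hostname; the real naming scheme is not given in the post.
short_hostname = "bcx-beta-3\n" # what `hostname -s` might print, trailing newline included

# Same expression as in beta.rb: strip the newline, keep the last character.
beta_host_name = short_hostname.chomp[-1]

cache_namespace = "bcx-beta#{beta_host_name}"
asset_host      = "https://b#{beta_host_name}-assets.basecamp.com"

puts cache_namespace
puts asset_host
```

Since `[-1]` keeps only the final character, this scheme relies on each server's hostname ending in a single distinguishing character, which is enough for five beta servers.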

Since many of our associated services are shared between production and the beta/staging/rollout environments, we take advantage of YAML anchors and aliases to avoid duplication:

    production: &production
      url: "http://10.0.0.1:9200"

    beta:
      <<: *production

    rollout:
      <<: *production

    staging:
      url: "http://10.0.1.2:9200"
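A quick way to check what each environment ends up with is to load the file and print the resolved values. This is a minimal sketch: it inlines the YAML as a string instead of reading a real config file, and it assumes Ruby 2.6 or newer, where `YAML.safe_load` accepts the `aliases:` keyword.

```ruby
require "yaml"

# The same structure as above, inlined so the example is self-contained.
SERVICES_YAML = <<~YAML
  production: &production
    url: "http://10.0.0.1:9200"

  beta:
    <<: *production

  rollout:
    <<: *production

  staging:
    url: "http://10.0.1.2:9200"
YAML

# aliases: true is required, otherwise safe_load rejects the *production references.
services = YAML.safe_load(SERVICES_YAML, aliases: true)

%w[production beta rollout staging].each do |env|
  puts "#{env}: #{services.fetch(env).fetch("url")}"
end
```

Beta and rollout inherit the production URL through the merge key, while staging keeps its own.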

Custom Configuration

To run six environments like we do, you can’t just rely on Rails.env.production? checks scattered all over your code base and plugins. It’s a terrible anti-pattern that’s akin to checking the class of an object for branching, rather than letting it quack like a duck. The solution is to expose configuration points that can be set via the environment configuration files.

Lots of plugins do this already, like config.trashed.statsd.host, but sometimes you need a configuration point for something existing in your code base or for a plugin that wasn’t designed this way. For that purpose, we’ve been using a tiny plugin called Custom Configuration. It allows you to do configuration points like this:

    # Use cleversafe for file storage
    config.x.cleversafe.enabled = true

    # Use S3 for off-site file storage
    config.x.s3.enabled = true

It simply exposes config.x and allows you to set any key for a namespace and then any key/value pair within that. Now you can set your configuration point in the main environment configuration files and pull that data off inside your application code. Or use an initializer to configure a plugin that didn’t follow this style.
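To show the idea outside of Rails, here is a minimal sketch of an object that behaves like `config.x`: any namespace, then any key/value pair inside it. The classes `CustomConfig` and `CustomNamespace` are hypothetical stand-ins written for this example, not the plugin's actual code.

```ruby
# Hypothetical stand-in for one namespace, e.g. config.x.cleversafe.
class CustomNamespace
  def initialize
    @values = {}
  end

  # `enabled = true` stores a value; `enabled` reads it back (nil if unset).
  def method_missing(name, *args)
    key = name.to_s
    if key.end_with?("=")
      @values[key.chomp("=").to_sym] = args.first
    else
      @values[name]
    end
  end

  def respond_to_missing?(_name, _include_private = false)
    true
  end
end

# Hypothetical stand-in for config.x itself: namespaces are created on first use.
class CustomConfig
  def initialize
    @namespaces = Hash.new { |hash, key| hash[key] = CustomNamespace.new }
  end

  def method_missing(name, *_args)
    @namespaces[name]
  end

  def respond_to_missing?(_name, _include_private = false)
    true
  end
end

# Set in an environment file...
x = CustomConfig.new
x.cleversafe.enabled = true
x.s3.enabled = false

# ...and read in application code, with no Rails.env checks in sight.
storage = x.cleversafe.enabled ? "cleversafe" : "local disk"
puts storage
```

The application code branches on the configuration point, so adding a seventh environment only means setting the value in one more file.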

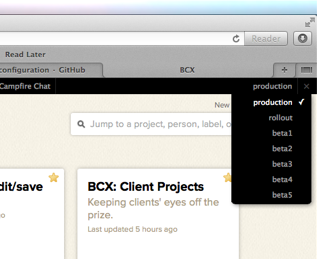

In-app stage switcher

For 37signals employees, we expose a convenient in-app stage switcher to jump between the different environments and setups. That’s mighty useful when you want to check out a new feature branch or ensure that everything got rolled out right.
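The comments below mention that the switcher runs off a cookie that the load balancer picks up. As a rough sketch of that decision in Ruby, assuming a cookie named `stage` and the stage names listed here (both are hypothetical, and the real routing happens in the load balancer, not in application code):

```ruby
# Hypothetical stage names; the post only says there are five beta servers,
# plus staging and rollout.
STAGES = %w[production beta1 beta2 beta3 beta4 beta5 staging rollout].freeze

# Parse a raw Cookie header ("a=1; b=2") into a hash, skipping malformed pairs.
def parse_cookies(header)
  header.to_s.split(/;\s*/).each_with_object({}) do |pair, cookies|
    name, value = pair.split("=", 2)
    next if name.nil? || name.strip.empty?

    cookies[name.strip] = value.to_s.strip
  end
end

# Pick the stage from the cookie, falling back to production for anything unknown.
def stage_for(cookie_header)
  requested = parse_cookies(cookie_header)["stage"]
  STAGES.include?(requested) ? requested : "production"
end

puts stage_for("session=abc123; stage=beta3")
puts stage_for("session=abc123")
```

Checking the value against a fixed list means a stray or tampered cookie simply lands on production.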

Rollout to 10%

While the rollout servers are always ready, we only use them when a feature is about to go live. The process is to deploy the feature branch you’re about to merge to master to the rollout environment. Then you flip the switch with cap rollout tenpercent:enable, which instructs the load balancers to send 10% of accounts to the rollout servers. When you’re content that all is well with the feature branch, you merge it into master, deploy to production, and turn off the rollout again with cap rollout tenpercent:disable.

The great thing about doing it like this is that the enable/disable action is very fast. It’s not like the scramble to do a full Capistrano rollback. This just tells the load balancer whether to send some traffic or not. So the second you catch an issue, you can get the 10% back on regular production, fix the problem, and then try again. Great for your blood pressure levels!
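The routing decision itself can be sketched in a few lines of Ruby. This is only an illustration under stated assumptions: the account ID is taken to be the first path segment, the 10% is chosen with a simple modulo bucket, and the `enabled` flag stands in for whatever `tenpercent:enable` toggles. The real rule lives in the load balancers and is not shown in the post.

```ruby
ROLLOUT_PERCENT = 10 # share of accounts sent to the rollout servers

# Assumed bucketing rule: the last two digits of the account ID decide the bucket,
# so the same account always lands on the same side.
def rollout_account?(account_id, enabled:)
  enabled && (account_id % 100) < ROLLOUT_PERCENT
end

# Assumed URL shape: "/<account_id>/..." as the first path segment.
def backend_for(path, enabled:)
  account_id = path[%r{\A/(\d+)(?:/|\z)}, 1]
  return :production unless account_id

  rollout_account?(account_id.to_i, enabled: enabled) ? :rollout : :production
end

puts backend_for("/1234505/projects/1", enabled: true)
puts backend_for("/1234550/projects/1", enabled: true)
puts backend_for("/1234505/projects/1", enabled: false)
```

Because the switch is a single flag checked per request, turning the rollout off sends every account back to production immediately, with no deploy involved.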

Just do it

For a long time, all we had was the staging environment. But the addition of multiple, dedicated beta servers to test feature branches concurrently, and the rollout environment to deploy with more confidence, has been a big boost to our workflow. There’s not a lot of work in setting this up and Rails was built for it from the beginning. The defaults are just a starting point.

Gareth Rees

on 13 Jun 13

Could you explain how you made the stage switcher?

Does the stage switcher just redirect to the URL of the selected stage (e.g. beta1.bascamp.com) or is something else going on behind the scenes?

Cheers

DHH

on 13 Jun 13

Gareth, the stage switcher runs off a cookie (which is then picked up by the load balancer). But you could do subdomains as well.

Aitor García

on 13 Jun 13

Thank you for sharing this!

I’d love to hear your opinion on environment-based vs. feature-flipper strategies (as Flickr, Etsy, etc. have been doing for a long time) for the rollout scenario.

DHH

on 13 Jun 13

I always want to leave the campsite in a better condition than I found it. To do that, I often refactor lots of code in the development of a new feature. When you’re not just adding to the pile, but rearranging it, it’s not the best idea to do that on production most of the time.

Additionally, while it’s great to use features in production for a bit before committing to their release, I also believe in clearing the decks and having as little discrepancy between the production version of Basecamp and the development version. That means an app not littered with dozens of features only visible to company workers. Work grows stale if it’s not shipped and it doesn’t provide any value to our customers before it is.

So that’s the general philosophy. But there’s also a matter of scale. We have less than 10 developers at 37signals. So we can’t work on dozens of features at once. If you have a hundred developers, all working on lots of features at the same time, maybe you have to accept the drawbacks of feature flippers. But that’s (thankfully, to me) not our scale.

Peer Allan

on 13 Jun 13

I assume that for your beta sites you have to run their migrations on the production DB in order to test them properly. Do you have a process for dealing with using the beta environments with data migrations that could potentially break production or do you just construct the migrations in such a way that both environments will function without impacting each other?

Attila

on 13 Jun 13

Nicely written article, I really like your “Rollout” environment, would definitely consider putting something similar in place for our own apps.

One small thing; you may want to fix a tiny typo: “It allow you to do configuration points like this” where you mention the ‘Custom Configuration’ plugin.

—Attila

DHH

on 13 Jun 13

Peer, we use staging for testing migrations since it doesn’t run on the production database.

Matt De Leon

on 13 Jun 13

@Peer Allan, looks like David mentioned staging as a place to test database migrations. I’m guessing the process looks like Staging -> Beta -> Rollout -> Production in a situation like that.

David, to clarify, is your load balancer aware of the account ID for the incoming request? Or does it randomly send 10% of traffic to rollout?

DHH

on 13 Jun 13

Matt, the account ID is part of all URLs in Basecamp, so it’s easy to route on.

Nikolay Shebanov

on 13 Jun 13

I’ve always been interested: when you roll out a tenpercent release with a new DB schema, how do you deal with storing (and merging) any new data?

DHH

on 13 Jun 13

Nikolay, no silver bullet there. If you can’t make it backwards compatible, then you can’t do 10%.

Richard Kuo

on 13 Jun 13

Any possibility we might see some kind of ‘rails generate environment NAME [options]’ in the future?

Greg

on 13 Jun 13

Production data is in pre-production!

Oh dear Lord

Wael

on 13 Jun 13

This looks great. One question though, why do you think you need both a staging environment AND a beta environment? It seems that the only requirement there is to be as close to a production env as possible, which you can achieve with one of them.

DHH

on 13 Jun 13

Richard, there’s nothing in it. Add one file. Not worthy of a generator.

Wael, answered in the post. Staging is for database migrations running off a database backup. Beta is for living use against our real account.

Jarosław Rzeszótko

on 13 Jun 13

I have written a gem for application configuration that is similar in style to the Rails configuration and to the referenced custom_configuration, but scales better to a larger number of settings by putting separate setting groups in separate files and allowing to overwrite the settings both per-environment and also just for the current installation:

https://github.com/jaroslawr/dynamic_configuration

Adam A.

on 13 Jun 13

Thanks for this! Got me thinking of how I can improve as well.

What kind of load balancer are you using? It sounds quite robust—being able to make that 10% decision and handling the cookie-based stage switcher.

Donnie C.

on 13 Jun 13

Looks like a great gem, @Jaroslaw.

DHH

on 13 Jun 13

Adam, big honkin’ F5s. Cost an arm and a leg, unfortunately. I do think lesser machines can do this too, though.

Rodrigo Rosenfeld Rosas

on 13 Jun 13

I’ve already touched this configuration subject on rails-core mailing list once and I’d like to take the chance to do it again here…

I also manage several environments and I completely changed the way we manage our environments.

The reason is that it is pretty boring to create a new environment the standard way. You have to create a new environment under config/environments/xxx.rb, you need to add more lines to config/database.yml, config/mongo.yml, e-mail and so on…

Also, you end up having to use RAILS_ENV=xxx all the time when working on those environments… And I’m worried on what might happen if you forgot to use that env variable when running a db:migrate for instance…

So, instead I preferred to simply create one Ruby configuration file under config/settings/xxx.rb and link config/settings.rb to the mapped one for a particular directory. settings.rb is ignored on .gitignore. And I don’t have to worry anymore about RAILS_ENV or editing multiple files when creating a new environment. I just need to tweak a single Ruby file and it is pretty easy to inherit from another one using “require_relative ’./another_env’” for instance, and then define what changes from that environment…

The only exception here is that I always load config/settings/test.rb if Rails.env.test? basically (the check is actually a bit more complex than that since not all my tests load Rails).

I’d really love to see all those YAML settings files completely gone for a fresh Rails app. There’s no need for them and we could simply put all our settings in a single Ruby file, or split it in several files if we wanted…

Rene

on 14 Jun 13

I’m curious how many (unit, integration, functional) tests Basecamp has. Together with the ability to switch stages, I guess this must be “a hell of a lot” :)

Patrick Tulskie

on 17 Jun 13

We’ve been using multiple production environments for a while for different things. One cool way to use them is to have environments that selectively load smaller portions of your application so you can cram many instances onto a single server.

The classic example is in background workers. With a “workers” environment, you can skip loading plugins, frameworks, and initializers (need to monkey patch for that) you don’t need and therefore keep your per-instance memory footprint lower and do more with a given box.
