My name is David Heinemeier Hansson, and I’m the CTO and co-founder of Basecamp, a small internet company from Chicago that sells project-management software and email services.

I first testified on the topic of big tech monopolies at the House Antitrust Subcommittee’s field hearing in Colorado just over a year ago, where I described the fear and loathing many small software makers have toward the app store duopoly.

How fees upwards of 30% of revenue, applied selectively, and in many cases capriciously, put an enormous economic burden on many small software businesses. How those fees, paired with the constant uncertainty as to whether the next software update will be rejected or held for ransom, can put entire businesses in jeopardy.

I was then merely speaking on behalf of my many fellow small business owners. As someone who’d heard the tragic stories from app store duopoly victims, whispered out of fear of further retribution, for the better part of the last decade.

Little did I know that just six months later, Basecamp would be in its own existential fight for survival, after launching a new, innovative email service called HEY.com. Apple first approved our application to the App Store, only to reverse themselves days later, after we had publicly launched to great critical acclaim. They demanded we start using their in-application payment system, such that they could take 30% of our revenues, or we’d be kicked off the App Store. A virtual death sentence for a new email service that was aiming to compete with the likes of Google’s Gmail and Apple’s own iCloud email hosting.

Against all odds, and due to Apple’s exceptionally poor timing and the bad PR that resulted from this skirmish happening during their yearly Developer Conference, we managed to beat back the bully, but so many other developers have tried the same and failed, or never dared try at all, and suffered in silence.

I concluded that original congressional testimony in January with a simple plea: “Help us, Congress. You’re our only hope.” Meaning, the market is not going to correct itself. The free market depends on legislative guardrails that prevent monopolies from exploiting their outsized power and preying on smaller competitors.

But when we were fighting for our survival and future over the stressful summer, whatever hope I had for congressional involvement seemed very far off. If your house is on fire, and you call for help, it’s no good if the red engine arrives after daffodils have started growing where your house once was.

While I still hope we’ll get relief from a federal level, there are no bills currently under consideration. No specific proposals on the table. So if and when that happens, it will be too late for the many businesses that either get crushed in the interim or never get started out of the fear and loathing outlined above.

I was extremely pleased to learn that this plea for help with the app store duopoly abuses was in fact being heard, it just so happened to be in the form of State Senate bills, like SB 2333. And not only had the plea been heard, but it had been answered in the most succinct and effective manner possible!

After the recitals, the 17 lines of SB 2333 read like music. Written in a language I can understand without hiring counsel to parse it for me. It almost seems too good to be true! But I sincerely hope that it is not. That you will listen to the small software developers from all over the country, who are tired of being bullied and shaken down by a handful of big tech monopolists out of Seattle and Silicon Valley.

We need a fair digital marketplace free of monopoly abuse as much in Chicago as in Bismarck. And when it comes to the app store duopoly, no single change will have a greater impact than giving small software makers like us a choice when it comes to in-app payment systems, and protection from retaliation, if we refuse the onerous deal the monopolists are offering.

Apple and Google would like to take credit for all the jobs and all the progress that has happened on top of their mobile platforms. But that’s a grotesque appropriation of the ingenuity and innovation that’s happening all over the country. It’s like if a shipping company wanted credit for all the products inside the containers it carried by rail or sea. Apple and Google may control tracks and shipping lanes, but without the work of millions of independent software makers, they’d have little to deliver to customers.

And handouts don’t help either. We’re not interested in a slightly lower rate on their obscene payment processing fees. We’re interested in choice. Unless we have choice, we’ll never have a fair hand to negotiate, and the market forces that have driven credit-card processing fees down to around 2% can’t work their magic.

North Dakota has the opportunity to create this level playing field, such that the next generation of software companies can be started there, and that if a team in Bismarck builds a better digital mouse trap, they won’t be hampered by abusive, extortive demands for 30% of their revenue from the existing big tech giants.

It’s simply obscene that a small software company that makes $1,000,000 in revenue has to send a $300,000 check to Cupertino or Mountain View, rather than invest in growing their business, while Facebook makes billions off those same app stores without paying any cut of their revenues whatsoever.

You have the power to chart a new path for the entire country by taking care of software developers who already do or would like to call North Dakota home. It’s incredibly inspiring to see a State Senate that’s not afraid to take on the biggest, most powerful tech giants in America, and write plain, simple rules that force them to give the next generation a chance.

Thank you so much for your consideration.

This includes us and our products at Basecamp. Therefore, we feel an ethical obligation to counter such harm. Both in terms of dealing with instances where Basecamp is used (and abused) to further such harm, and to state unequivocally that Basecamp is not a safe haven for people who wish to commit such harm.

Our full Use Restriction Policy outlines several forms of use that are not permitted, including, but not limited to:

- Violence, or threats thereof: If an activity qualifies as violent crime in the United States or where you live, you may not use Basecamp products to plan, perpetrate, incite, or threaten that activity.

- Doxing: If you are using Basecamp products to share other people’s private personal information for the purposes of harassment, we don’t want anything to do with you.

- Child exploitation, sexualization, or abuse: We don’t tolerate any activities that create, disseminate, or otherwise cause child abuse.

- Malware or spyware: Code for good, not evil. If you are using our products to make or distribute anything that qualifies as malware or spyware — including remote user surveillance — begone.

- Phishing or otherwise attempting fraud: It is not okay to lie about who you are or who you affiliate with to steal from, extort, or otherwise harm others.

Any reports of violations of these highlighted restrictions, or any of the other restrictions present in our terms, will result in an investigation. This investigation will have:

- Human oversight: Our internal abuse oversight committee includes our executives, David and Jason, and representatives from multiple departments across the company. On rare occasions for particularly sensitive situations, or if legally required, we may also seek counsel from external experts.

- Balanced responsibilities: We have an obligation to protect the privacy and safety of both our customers, and the people reporting issues to us. We do our best to balance those responsibilities throughout the process.

- Focus on evidence: We base our decisions on the evidence available to us: what we see and hear account users say and do. We document what we observe, and ask whether that evidence points to a restricted use.

While some violations are flatly obvious, others are subjective, nuanced, and difficult to adjudicate. We give each case adequate time and attention, commensurate with the violation, criticality, and severity of the charge.

If you’re aware of any Basecamp product (Basecamp, HEY, Backpack, Highrise, Ta-da List, Campfire) being used for something that would violate our Use Restrictions Policy, please let us know by emailing [email protected] and we will investigate. If you’re not 100% sure, report it anyway.

Someone on our team will respond within one business day to let you know we’ve begun our investigation. We will also let you know the outcome of our investigation (unless you ask us not to, or we’re not allowed to under law).

While our use restrictions are comprehensive, they can’t be exhaustive — it’s possible an offense could defy categorization, present for the first time, or illuminate a moral quandary we hadn’t yet considered. That said, we hope the overarching spirit is clear: Basecamp is not to be harnessed for harm, whether mental, physical, personal or civic. Different points of view — philosophical, religious, and political — are welcome, but ideologies like white nationalism, or hate-fueled movements anchored by oppression, violence, abuse, extermination, or domination of one group over another, will not be accepted here.

If you, or the activity in your account, is ultimately found in violation of these restrictions, your account may be closed. A permanent ban from our services may also result. Further, as a small, privately owned independent business that puts our values and conscience ahead of growth at all costs, we reserve the right to deny service to anyone we ultimately feel uncomfortable doing business with.

Thank you.

For further reference, our full list of terms is available here: https://basecamp.com/about/policies

Because the mainstream story in web development of the past decade or so has been one of JavaScript all the things! Let’s use it on the server! Let’s use it on the client! Let’s have it generate all the HTML dynamically! And, really, it’s pretty amazing that you really can do all that. JavaScript has come an incredibly long way since the dark ages of Internet Explorer’s stagnant monopoly.

But just because you can, doesn’t mean you should.

The price for pursuing JavaScript for everything has been a monstrosity of modern complexity. Yes, it’s far more powerful than it ever was. But it’s also far more convoluted and time-consuming than is anywhere close to reasonable for the vast majority of web applications.

Complexity isn’t really a big problem if you’re a huge company. If you have thousands of developers each responsible for a tiny sliver of the application, you might well find appeal and productivity in complicated architectures and build processes. You can amortize that investment over your thousands of developers, and it’s not going to break your back.

But incidental complexity can absolutely break your back if you’re a small team where everyone has to do a lot. The tools and techniques forged in the belly of a huge company are often the exact opposite of what you need to make progress at your scale.

This is what HTML Over The Wire is all about. It’s a celebration of the simplicity in HTML as the format for carrying data and presentation together, whether this is delivered on the first load or with subsequent dynamic updates. A name for a technique that can radically change the assumptions many people have about how modern web applications have to be built today.

Yes, we need a bit of JavaScript to make that work well enough to compete with the fidelity offered by traditional single-page applications, but the bulk of that can be abstracted away by a few small libraries, and not leak into the application code we write.

Again, it’s not that JavaScript is bad. Or that you don’t need any to write a modern web application. JavaScript is good! Writing a bit to put on the finishing touches is perfectly reasonable. But it needn’t be at the center of everything you do on the web.

When we embrace HTML as the format to send across the wire, we liberate ourselves from having to write all the code that creates that HTML in JavaScript. You now get to write it in Ruby or Erlang or Clojure or Smalltalk or any programming language that might set your heart aflutter. We return the web to a place full of diversity in the implementations, and HTML as the lingua franca of describing those applications directly to the browser.
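To make the idea concrete, here is a minimal sketch in Python of a server-side function that produces a ready-to-insert HTML fragment instead of JSON. The function name, the markup, and the sample data are all hypothetical, chosen only for illustration; any server-side language would do the same job.

```python
from html import escape


def render_message_fragment(author: str, body: str) -> str:
    """Render one message as an HTML fragment that can be sent as-is."""
    # Escape user-supplied text so it cannot inject markup into the page.
    return (
        '<li class="message">'
        f"<strong>{escape(author)}</strong>: {escape(body)}"
        "</li>"
    )


if __name__ == "__main__":
    # The server sends this string over the wire; the browser only has to
    # insert it into the existing page, with no client-side templating.
    print(render_message_fragment("Ada", "Ship it <3"))
```

The same function can serve both the first page load and a later dynamic update, which is the point of keeping HTML as the wire format.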

HTML over the wire is a technique for a simpler life that’ll hopefully appeal to both seasoned developers who are tired of dealing with the JavaScript tower of complexity and to those just joining our industry overwhelmed by what they have to learn. A throwback to a time where you could view source and make sense of it. But with all the affordances to create wonderfully fluid and appealing modern web applications.

Intrigued? Check out Hotwire for a concrete implementation of these ideals.

“How do you validate if it’s going to work?”

“How do you know if people will buy it or not?”

“How do you validate product market fit?”

“How do you validate if a feature is worth building?”

“How do you validate a design?”

You can’t.

You can’t.

You can’t.

You can’t.

You can’t.

I mean you can, but not in the spirit of the questions being asked.

What people are asking about is certainty ahead of time. But time doesn’t start when you start working on something, or when you have a piece of the whole ready. It starts when the whole thing hits the market.

How do you know if what you’re doing is right while you’re doing it? You can’t. You can only have a hunch, a feeling, a belief. And if the only way to tell if you’ve completely missed the mark is to ask other people and wait for them to tell you, then you’re likely too far lost from the start. If you make products, you better have a sense of where you’re heading without having to ask for directions.

There’s really only one real way to get as close to certain as possible. That’s to build the actual thing and make it actually available for anyone to try, use, and buy. Real usage on real things on real days during the course of real work is the only way to validate anything. And even then, it’s barely validation since there are so many other variables at play. Timing, marketing, pricing, messaging, etc.

Truth is, you don’t know, you won’t know, you’ll never know until you know and reflect back on something real. And the best way to find out, is to believe in it, make it, and put it out there. You do your best, you promote it the best you can, you prepare yourself the best way you know how. And then you literally cross your fingers. I’m not kidding.

You can’t validate something that doesn’t exist. You can’t validate an idea. You can’t validate someone’s guess. You can’t validate an abstraction. You can’t validate a sketch, or a wireframe, or an MVP that isn’t the actual product.

When I hear MVP, I don’t think Minimum Viable Product. I think Minimum Viable Pie. The food kind.

A slice of pie is all you need to evaluate the whole pie. It’s homogenous. But that’s not how products work. Products are a collection of interwoven parts, one dependent on another, one leading to another, one integrating with another. You can’t take a slice of a product, ask people how they like it, and deduce they’ll like the rest of the product once you’ve completed it. All you learn is that they like or don’t like the slice you gave them.

If you want to see if something works, make it. The whole thing. The simplest version of the whole thing – that’s what version 1.0 is supposed to be. But make that, put it out there, and learn. If you want answers, you have to ask the question, and the question is: Market, what do you think of this completed version 1.0 of our product?

Don’t mistake an impression of a piece of your product as a proxy for the whole truth. When you give someone a slice of something that isn’t homogenous, you’re asking them to guess. You can’t base certainty on that.

That said, there’s one common way to uncertainty: That’s to ask one more person their opinion. It’s easy to think the more opinions you have, the more certain you’ll be, but in practice it’s quite the opposite. If you ever want to be less sure of yourself, less confident in the outcome, just ask someone else what they think. It works every time.

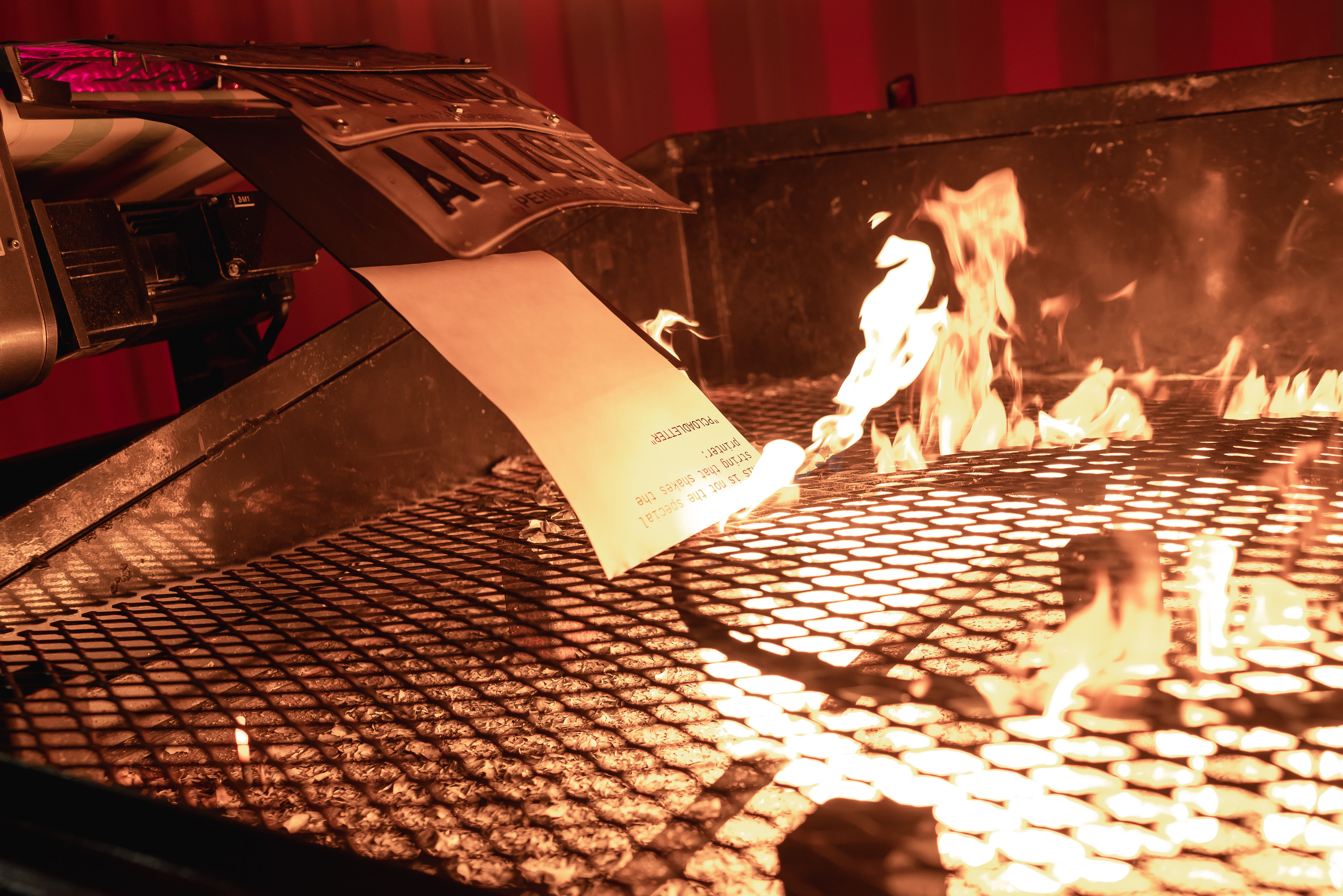

What follows is far more than you ever want to learn about building an internet-connected dumpster fire.

The Contraption

We started with the simple concept of “a flaming dumpster you can email.” The idea was that your email would cause a dumpster somewhere in the world to erupt with flame and consume your email in a moment of remote catharsis. We wanted anyone in the world to be able to play and not have to sign up for anything.

(Real quick: It goes without saying that this is a highly dangerous project with many exotic ways it could go very wrong. We worked with professionals to build this thing and would not have attempted it without them. This is not a complete manual on how to build a safe propane-powered flaming dumpster and this article should only be enjoyed as entertainment. Please don’t build this.)

Fire

Fuel selection was one of our first decisions. We needed a large flame that was instant, controllable, and predictable. This meant a gaseous flame we could turn on and off instead of a solid fuel like wood that would burn uncontrollably. Natural gas is plentiful in cities but delivered at too low of a pressure (~2 psi) to make a convincing, rolling flame. We wanted a real “burning garbage” look to deliver that 2020 essence.

We landed on propane because it’s also readily available but comes out of the tank at 100-200 psi depending on temperature. Propane is also a relatively “clean burning” fuel with the only byproducts being carbon dioxide and water vapor. We rented a 500 gallon liquid propane tank and connected it to a 25 psi regulator before a super heavy duty rubber hose that leads to the dumpster.

I reached out to my good friend Josh Bacon to help us with the flame effect portion of this project. He’s built a number of fire-enabled contraptions and works annually as a lead fire safety inspector at Burning Man. Leaning on existing knowledgeable people is good when you don’t have the time and burn cream to learn the hard way.

Two 120v industrial solenoids, or electronically-controlled valves, control the flow of propane at both the tank and at the back of the dumpster. When our microcontroller, a Raspberry Pi 4, calls for fire it opens the valves allowing the flow of propane from the tank. These valves are designed to “fail closed” meaning if we remove power the valves will snap shut by default.

Aboard the dumpster the propane flows to 3 loops of heavy copper tubing that act as our flame effect. Tiny pinholes are drilled into the underside of the tubing to function as jets for the burning propane. We put these on the underside of the tubing so the flames would have a natural rolling look. The entire effect is wired with stainless steel safety wire to the underside of an expanded metal mesh grate that sits in a custom-fabricated ⅛” steel plate tray. The entire tray sits on welded supports so we can lift it out with a forklift for maintenance.

The propane is ignited by two redundant hot surface igniters – or HSIs – screwed into the effect tray. These HSIs remain on all of the time so we don’t have to manage a separate ignition circuit that may fail. If the igniters somehow failed and the propane was allowed to flow freely it would only be for short 30-second bursts into open atmosphere. As a safeguard we have an operator running the dumpster that’s tasked with overseeing the operation and hitting the “emergency stop” if anything goes awry.

Printer

With our dumpster largely sorted and connected, we looked towards the printer. Since this was going to be outdoors we couldn’t use an inkjet for fear the ink itself would freeze. A laser printer is warm by design so I searched for a commercial-grade printer that could deal with the duty cycle of running 8-10 hours per day. I settled on an HP M255dw as it was affordable and toner was widely available. There were likely dozens of better options, but done is better than perfect.

The problem with most laser printers is they print “face down.” We needed our prints “face up” so you can see them on camera. Rather than trying to chase down the perfect printer we simply feed it a 2-page PDF with a blank first page so the output is “face up.” More on that later.

One of the more challenging parts of this project was getting this printer to successfully eject the paper onto the conveyor using gravity alone. We continually increased the printer-to-conveyor angle until the paper reliably slipped out naturally onto the belt. In hindsight a printer that ejected out of the front instead of the top would’ve been significantly easier to design around.

The printer is driven by the Raspberry Pi over ethernet using CUPS on Debian.

Conveyor

If you were trying to get this project done quickly and easily you wouldn’t use a conveyor. Getting this done the efficient way would probably involve the printer being located above the dumpster with a ramp leading to the flames. A ramp can never fail, works every time, and requires no code or power.

There is only one good reason to do it the way we did it: Conveyors are way cooler.

The conveyor we’re using is a Dorner that came out of a pharmaceutical manufacturing plant. In its former life it was part of a cap sorting machine that put pill bottle caps into neat little rows so they could be applied with great care. We removed all of that carefully-calibrated accessory structure and attached a giant steel leg to boost it up to dumpster height.

The conveyor was originally controlled by an intensely complicated industrial control system located in the box beneath the printer. After spending about 6 hours gazing into this abyss of relays and wires I decided it was far easier to splice into the control box on the side instead and simulate the start-stop buttons with relays. When we call for “start” our relay closes the “start” circuit at the button on the conveyor and the magic begins. To stop the conveyor we open the “emergency stop” circuit momentarily which stops the conveyor. It’s not the “proper” solution but it works which makes it right.
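The button trick can be sketched roughly like this in Python. This is an illustration only, not the project's code: the class name, the callback-style relay setters, and the 0.2 second pulse length are all assumptions, and the real wiring determines which relay state counts as "closed."

```python
import time
from typing import Callable


class ConveyorButtons:
    """Imitates the conveyor's start and emergency-stop buttons with two relays."""

    def __init__(
        self,
        set_start_relay: Callable[[bool], None],   # True = start circuit closed
        set_estop_relay: Callable[[bool], None],   # True = e-stop circuit closed
        pulse_seconds: float = 0.2,                # assumed button-press duration
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self._set_start = set_start_relay
        self._set_estop = set_estop_relay
        self._pulse = pulse_seconds
        self._sleep = sleep

    def start(self) -> None:
        # Close the start circuit briefly, like a finger pressing the button.
        self._set_start(True)
        try:
            self._sleep(self._pulse)
        finally:
            self._set_start(False)

    def stop(self) -> None:
        # Open the emergency-stop circuit briefly, then restore it so the
        # conveyor can be started again later.
        self._set_estop(False)
        try:
            self._sleep(self._pulse)
        finally:
            self._set_estop(True)


if __name__ == "__main__":
    buttons = ConveyorButtons(
        set_start_relay=lambda on: print("start relay closed:", on),
        set_estop_relay=lambda closed: print("e-stop relay closed:", closed),
    )
    buttons.start()
    buttons.stop()
```

Passing the relay setters in as plain functions keeps the sketch independent of any particular GPIO library and makes it easy to dry-run without hardware attached.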

Enclosure

This mess of wires, propane, dumpster, and fire needed to be protected from the elements. It couldn’t be indoors because the fire would set off the fire alarm – among other obvious complications. We could’ve potentially figured out ways to vent the dumpster with a restaurant grill hood or similar, but that would’ve turned into a multi-thousand dollar engineering project with more electrical systems, fans, cinder block modifications and other high-effort things. So outside it went.

It was our industrial designer friend Eric Froh that suggested we hack the side off of a 20ft shipping container to make a somewhat weather-resistant enclosure. We hired Ben Wolf of Ferrous Wolf to modify our shipping container to fit the bill. Ben and his compatriots cut the side off of the container and reinforced it with structural steel. They custom-fabricated the chimney from the former container sides and reinforced it with angle iron and expanded metal. The whole operation was completed in about a week.

The container shields most of the project from the weather with a covered chimney out of the top. We enclosed the printer and electronics in a plexiglass and aluminum box to keep the rest of the weather off of the sensitive pieces.

The interior of the container was a drab beige color so we enlisted the help of our designer friend Monica Dubray to both choose and paint the container and the dumpster. The rest of the color is added by WS2812B LEDs driven by a Pixelblaze.

Cameras and Streaming

The project is streamed during operating hours on 3 networked cameras. The main camera is a Panasonic CX350 while the other two are Panasonic CX10s. I chose these cameras because they can natively stream over hardwired ethernet via your choice of RTMP – the most common streaming protocol originally created by Macromedia – or NDI. NDI allows you to stream up to 4k over a local network and control camera functions remotely.

We ended up using NDI to feed the cameras into Open Broadcaster Software – OBS – which enabled us to create a picture-in-picture display showing all 3 cameras on 1 stream. OBS is running on a 2019 MacBook Pro on the network.

We send our composited stream out to Restream.io so it can simultaneously broadcast it to Twitch, IBM Video and YouTube. We originally had it just going to IBM Video (formerly Ustream) which was embedded on hey.science but we quickly shot beyond our allotted 5,000 viewer hours in the first few minutes of the project. At approximately 47k viewer hours, or $9,400, we made a fast switch to Twitch to avoid another giant hosting bill.

We still pipe video to IBM Video because that’s where our clips are made and sent to email submitters after their message gets torched. More on that below.

Controller

The microcontroller that runs the whole dumpster-side operation is a standard Raspberry Pi 4 8GB with a relay hat. The relay hat directly switches both of our 120v solenoids for gas control and the low voltage controls to manipulate the conveyor. It has been astoundingly robust and workable to our needs.

The Code

While I’m now knowledgeable in most things dumpster, it’s our Lead Systems Administrator Nathan Anderson who developed all of the backend code that makes the dumpster work. I asked him for some brief words on what makes it tick:

“Despite running HEY.com, our amazing new email product, we were unwilling to run this experiment through our production servers. So we used postfix to forward [email protected] over to an AWS SES endpoint. There, we would do a preliminary screening, rejecting mail that failed DKIM, SPF, DMARC, SPAM, and VIRUS checks. We would also check the headers against our moderation rules (custom sender/domain exclusion checks). From there, we’d drop the email into an S3 bucket.

The S3 bucket is configured to send an SNS notification whenever an object is written to the bucket. This notification triggers another Lambda function that does several more steps:

- Screen for size. Since the SES Lambda action does not receive information about the total size of the email, we have to check the object size in S3. SES allows email up to 10MB in size, but we limit the dumpsterfire to 5MB.

- Render the email. We use the mail gem to extract text parts or images. This detection is brittle, as all email parsing is. I initially wanted to extract our email processing/rendering routines from Hey, but our time constraints ruled that out, and we went with a naive approach.

- Write the simplified job object (sender, rendered content, Hey status) to S3, and pop a message into the screening queue, and another message on our reply queue.

After the emails are rendered, they’re almost ready to print. We have secondary screening queues where our rules are re-applied. This queue is designed to re-scan the jobs based on our moderation rules. After the screener lambda processes the jobs, they’re put into the moderation queue.

From here, we’re using node-red to handle the environment on the Raspberry Pi. We’ve got a moderation page that shows the Operator the content of the email, and provides them with buttons to:

- Print – Puts the message into a print queue. (VIP/Regular)

- Print Now – Puts the message into the Alpha queue.

- Skip – Drops the job, and deletes the content from S3.

- Skip and Block Sender/Domain – Drops the job, and adds the sender/domain to our moderation rules.

If the Operator notices there is a stream of messages from a single email address or domain, they’re able to add that sender/domain to our moderation rules, and we can re-scan the moderation queue, by sending the jobs in the moderation queue back through the screener.

The printing happens in a loop, and pulls messages from the 3 priority-based queues, Alpha -> VIP -> Normal. When the Raspberry Pi pulls the job, it reads the body from S3, and then processes the email into a printable PDF via img2pdf or paps. Due to the way most printers output paper, we need to abuse the duplex function and generate a 2-sided PDF with a blank first page so it comes out with the text facing up.

Once we have the PDF, we use zuzel-printer, a node.js wrapper around `lp` to send the job to the printer, and tell us when it’s finished printing. After `lp` is done, we use onoff + setTimeout, to handle triggering our relays for the belt start/stop and triggering the fire.

In a perfect world, we’d use a sensor (machine vision detection, optical switch, etc…) to just keep the belt on until the paper was in the correct spot, but again, time constraints prevented us from using that method, and again, we used the naive approach of timing the belt speed. (Please don’t tell my FLL kids that I relied on timing for this…😅)

We’re also using those same timings to drive our video api. After we stop the fire, and then stop the recording, we tag the recording we just made with the job id, and store the video id in our job object that is written into the Completed Queue in AWS.

This queue handles cleanup in S3, and puts the final message on the complete-reply queue.

Both the reply and complete-reply queues are consumed by processes in our datacenter to send email replies out via [email protected].”
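As a rough illustration of the screening rules Nathan describes, here is a small Python sketch. It is not the project's code. The verdict field names follow the SES receipt format as I understand it, treating anything other than "PASS" as a rejection is an assumption, and so is reading 5MB as binary megabytes. In the real pipeline the size check happens in a later step, against the object size in S3.

```python
from typing import Set

MAX_BYTES = 5 * 1024 * 1024  # the 5MB dumpster limit (assumed binary megabytes)

# Verdicts assumed to be present on the SES receipt for each incoming email.
VERDICT_KEYS = (
    "dkimVerdict",
    "spfVerdict",
    "dmarcVerdict",
    "spamVerdict",
    "virusVerdict",
)


def passes_verdicts(receipt: dict) -> bool:
    """True only if every verdict is present and reports PASS (assumed policy)."""
    return all(
        receipt.get(key, {}).get("status") == "PASS" for key in VERDICT_KEYS
    )


def is_blocked(sender: str, blocked_senders: Set[str], blocked_domains: Set[str]) -> bool:
    """Apply sender/domain exclusion rules, ignoring case."""
    address = sender.strip().lower()
    domain = address.rpartition("@")[2]
    return address in blocked_senders or domain in blocked_domains


def within_size_limit(object_size_bytes: int) -> bool:
    """Check the stored object's size against the dumpster limit."""
    return object_size_bytes <= MAX_BYTES


if __name__ == "__main__":
    receipt = {key: {"status": "PASS"} for key in VERDICT_KEYS}
    print(passes_verdicts(receipt))
    print(is_blocked("someone@bad.example", set(), {"bad.example"}))
    print(within_size_limit(6 * 1024 * 1024))
```

Keeping each rule as its own small function mirrors the way the rules get re-applied later, when the moderation queue is sent back through the screener.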

If you’d like to get the actual code for this project we made it available via a public repository here: https://github.com/basecamp/dumpsterfire-2020
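For readers who just want the gist of the print loop without opening the repository, here is a simplified Python sketch of two pieces: pulling from the three queues in priority order, and the timing-based belt and fire sequence. The real project does this in Node.js with onoff and setTimeout, so everything below is a stand-in. The function names, the durations, and the assumption that the belt stops before the fire starts are mine, not taken from the code.

```python
import time
from collections import deque
from typing import Callable, Deque, Dict, Optional

# Highest priority first, matching Alpha -> VIP -> Normal.
PRIORITY_ORDER = ("alpha", "vip", "normal")


def next_job(queues: Dict[str, Deque[str]]) -> Optional[str]:
    """Return the next job id from the highest-priority non-empty queue."""
    for name in PRIORITY_ORDER:
        queue = queues.get(name)
        if queue:
            return queue.popleft()
    return None


def burn_sequence(
    set_belt: Callable[[bool], None],
    set_fire: Callable[[bool], None],
    belt_seconds: float,
    fire_seconds: float,
    sleep: Callable[[float], None] = time.sleep,
) -> None:
    """Run the belt for a fixed time, then fire for a fixed time."""
    try:
        set_belt(True)
        sleep(belt_seconds)   # naive timing instead of a position sensor
        set_belt(False)
        set_fire(True)
        sleep(fire_seconds)
    finally:
        # Whatever happens, leave the belt stopped and the gas relay off.
        set_belt(False)
        set_fire(False)


if __name__ == "__main__":
    queues = {
        "alpha": deque(),
        "vip": deque(["vip-1"]),
        "normal": deque(["normal-1"]),
    }
    print(next_job(queues))
    burn_sequence(
        set_belt=lambda on: print("belt:", on),
        set_fire=lambda on: print("fire:", on),
        belt_seconds=4.0,      # hypothetical durations
        fire_seconds=2.0,
        sleep=lambda s: None,  # skip real waiting in this dry run
    )
```

The `finally` block is there for the same reason the valves fail closed: if anything goes wrong mid-sequence, the safe state is belt stopped and gas off.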

Why

Most built objects are rarely as simple as they seem on the surface – even a dumpster fire. I could easily write a 600-page epic on the things we’ve learned behind each individual system on this project.

You’re likely asking yourself, “Why go into excruciating detail about propane, shipping container architecture, and conveyor belt control schema – aren’t you working at a software company?”

The truth is the vast majority of these sorts of marketing activations are done by 3rd party marketing agencies. Some people somewhere at Brand X have a half dozen long meetings with Agency Y and talk about this big attention-grabbing thing they’re going to do for user growth. The agency finds anonymous, knowledgeable builders to put the thing together, drags it out into the public square, and slaps the decals for Brand X on it. Invoices go out, impressions are garnered, rinse repeat. You could swap out the stickers and make it about Brand Z next week. Nobody would know the difference.

A project like our dumpster fire would be nearly impossible to pitch in-house at a giant publicly-held company. You can hear it now: “It involves actual fire? That’s too risky. We don’t know how to do that. I don’t even know where we’d start. I don’t get it.”

We built this at Basecamp because it was fun. We got to work with physical parts, build and refine unpredictable machines, and make a few hundred thousand people laugh along the way. Is this going to be a good business move? We’ll see. Right now we know for sure that it was entertaining for both us and our audience. You can’t say the same thing about banner ads.

We used our in-house skills and passions to make this big, silly thing happen. The parts we weren’t great at – fabrication, flame effects, industrial design – were hired out to local artists directly at a thriving wage.

Why build an email-connected dumpster fire? Why not.

Credits:

Metal Fabrication: Ben Wolf and Ferrous Wolf

Industrial Design: Eric Froh

Colors and Paint: Monica Dubray

Flame Effects: Josh Bacon

HERL Website: Adam Stoddard, Marketing Designer at Basecamp

Developer: Nathan Anderson, Lead Systems Administrator at Basecamp

Photography: Derek Cookson

Chief Cook and Bottle Washer: Andy Didorosi, Head of Marketing at Basecamp

PS: I did a tech walkthrough of the project you can watch below:

- Developer makes a code change in a git branch and pushes it to GitHub.

- An automated build pipeline run is kicked off by a GitHub webhook. It builds some Docker images and kicks off another build that handles the deploy itself.

- That deploy build, well, it deploys — to AWS EKS, Amazon’s managed Kubernetes offering, via a Helm chart that contains all of the YAML specifications for deployments, services, ingresses, etc.

- alb-ingress-controller (now aws-load-balancer-controller) creates an ALB for the branch.

- external-dns creates a DNS record pointing to the new ALB.

- Dev can access their branch from their browser using a special branch-specific URL.

This process takes 5-10 minutes for a brand new branch from push to being accessible (typically).

Our current setup works well, but it’s got two big faults:

- Each branch needs its own ALB (that’s what is generated by the Ingress resource).

- DNS is DNS is DNS and sometimes it takes a while to propagate and requires that we manage a ton of records (3-5 for each branch).

These faults are intertwined: if I didn’t have to give each branch its own ALB, I could use a wildcard record and point every subdomain on our branch-deploy-specific-domain to a single ALB and let the ALB route requests to where they belong via host headers. That means I can save money by not needing all of those ALBs and we can reduce the DNS-being-DNS time to zero (and the complexity of external-dns annotations and conditionals spread throughout our YAML).

(Waiting a few minutes for DNS to propagate and resolve doesn’t sound like a big deal, but we shoot ourselves in the foot with the way our deploy flow works. As soon as the deploy build finishes, we check that the revision has actually been deployed by visiting an internal path on the new hostname. That lookup can happen before the record has been created, and your local machine then caches the NXDOMAIN response until the TTL expires.)
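The negative-caching trap described above can be illustrated with a small simulation. This is only a sketch: `NegativeCachingResolver`, the 300-second negative TTL, the hostname `my-branch.branch-deploy.com`, and the address `10.0.0.1` are all made up for illustration, and real resolvers derive the negative TTL from the zone's SOA record.

```python
from typing import Callable, Dict, List, Optional


class NegativeCachingResolver:
    """Toy resolver that remembers NXDOMAIN answers for a fixed TTL."""

    def __init__(self, upstream: Callable[[str], Optional[str]],
                 negative_ttl: float, clock: Callable[[], float]) -> None:
        self.upstream = upstream          # returns an address, or None for NXDOMAIN
        self.negative_ttl = negative_ttl  # seconds an NXDOMAIN answer is reused
        self.clock = clock                # injected so the demo controls time
        self._nxdomain_until: Dict[str, float] = {}

    def resolve(self, name: str) -> Optional[str]:
        now = self.clock()
        expires = self._nxdomain_until.get(name)
        if expires is not None and now < expires:
            return None  # answered from the negative cache, upstream not asked
        answer = self.upstream(name)
        if answer is None:
            self._nxdomain_until[name] = now + self.negative_ttl
        else:
            self._nxdomain_until.pop(name, None)
        return answer


def simulate_early_check() -> List[Optional[str]]:
    records: Dict[str, str] = {}  # stands in for the hosted zone
    now = [0.0]
    resolver = NegativeCachingResolver(records.get, negative_ttl=300,
                                       clock=lambda: now[0])
    host = "my-branch.branch-deploy.com"  # hypothetical branch hostname

    results = [resolver.resolve(host)]    # deploy check fires before the record exists
    records[host] = "10.0.0.1"            # the record is created shortly afterwards
    now[0] = 10
    results.append(resolver.resolve(host))  # still NXDOMAIN, served from the cache
    now[0] = 301
    results.append(resolver.resolve(host))  # cache entry expired, lookup succeeds
    return results
```

In the simulation, the second lookup fails even though the record exists by then, which is the behavior that makes an eager post-deploy check counterproductive.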

Before now, this was doable but required some extra effort that made it not worthwhile — it would likely need to be done through a custom controller that would take care of adding your services to a single Ingress object via custom annotations. This path was Fine™️ (I even made a proof-of-concept controller that did just that), but it meant there was some additional piece of tooling that we now had to manage, along with needing to create and manage that primary Ingress object.

Enter a new version of alb-ingress-controller (and its new name: aws-load-balancer-controller) that includes a new IngressGroup feature that does exactly what I need. It adds a new set of annotations that I can add to my Ingresses which will cause all of my Ingress resources to be routing rules on a single ALB rather than individual ALBs.
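As a rough sketch of what that looks like per branch, the helper below builds an Ingress manifest as a Python dict. The `alb.ingress.kubernetes.io/group.name` annotation is the one the controller documents for IngressGroup; the function name `branch_ingress`, the `-web` service naming, the group name `branch-deploys`, and port 80 are assumptions for illustration, not our actual Helm chart.

```python
import json


def branch_ingress(branch: str, domain: str, group: str, port: int = 80) -> dict:
    """Build an Ingress manifest that joins a shared ALB via IngressGroup."""
    service = f"{branch}-web"  # hypothetical service naming scheme
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {
            "name": service,
            "annotations": {
                "kubernetes.io/ingress.class": "alb",
                # Ingresses that share a group name become rules on one ALB.
                "alb.ingress.kubernetes.io/group.name": group,
            },
        },
        "spec": {
            "rules": [{
                # Host-based routing: each branch gets its own hostname.
                "host": f"{branch}.{domain}",
                "http": {"paths": [{
                    "path": "/",
                    "pathType": "Prefix",
                    "backend": {"service": {"name": service,
                                            "port": {"number": port}}},
                }]},
            }],
        },
    }


if __name__ == "__main__":
    manifest = branch_ingress("alb-v2", "branch-deploy.com", "branch-deploys")
    print(json.dumps(manifest, indent=2))
```

Every branch gets its own Ingress with its own host rule, but because they all carry the same group name, the controller should merge them into routing rules on one load balancer.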

“Great!” I think to myself on the morning I start the project of testing the new revision and figuring out how I want to implement this (using it as an opportunity to clean up a bunch of technical debt, too).

I get everything in place — I’ve updated aws-load-balancer-controller in my test cluster, deleted all of the branch-specific ALIAS records that existed for the old ALBs, told external-dns not to manage Ingress resources anymore, and set up a wildcard ALIAS pointing to my new single ALB that all of these branches should be sharing.

It doesn’t work.

$ curl --header "Host: alb-v2.branch-deploy.com" https://alb-v2.branch-deploy.com

curl: (6) Could not resolve host: alb-v2.branch-deploy.com

But if I call the ALB directly with the proper host header, it does:

$ curl --header "Host: alb-v2.branch-deploy.com" --insecure https://internal-k8s-swiper-no-swiping.us-east-1.elb.amazonaws.com

<html><body>You are being <a href="https://alb-v2.branch-deploy.com/sign_in">redirected</a>.</body></html>

(╯°□°)╯︵ ┻━┻

I have no clue what is going on. I can clearly see that the record exists in Route53, but I can’t resolve it locally, nor can some DNS testing services (❤️ MX Toolbox).

Maybe it’s the “Evaluate Target Health” option on the wildcard record? Disabled that and tried again, still nothing.

I’m thoroughly stumped and start browsing the Route53 documentation and find this line and think it’s the answer to my problem:

If you create a record named *.example.com and there’s no example.com record, Route 53 responds to DNS queries for example.com with NXDOMAIN (non-existent domain).

So off I go to create a record for branch-deploy.com to see if maybe that’s it. But that still doesn’t do it. This is when I re-read that line and realize that it doesn’t apply to me anyway — I had read it incorrectly the first time, I’m not trying to resolve branch-deploy.com. (My initial reading was that *.branch-deploy.com wouldn’t resolve without a record for branch-deploy.com existing).

Welp, time to dig into the RFC, there’s bound to be some obscure thing I’m missing here. Correct that assumption was.

Wildcard RRs do not apply:

– When the query is in another zone. That is, delegation cancels the wildcard defaults.

– When the query name or a name between the wildcard domain and the query name is know to exist. For example, if a wildcard RR has an owner name of “*.X”, and the zone also contains RRs attached to B.X, the wildcards would apply to queries for name Z.X (presuming there is no explicit information for Z.X), but not to B.X, A.B.X, or X.

Hmm, that second bullet point sounds like a lead. Let me go back to my Route53 zone and look.

┬─┬ ノ( ゜-゜ノ)

Ah, I see it.

One feature of our branch deploy system is that you can also have a functioning mail pipeline that is specific to your branch. To use that feature, you email [email protected]. To make that work, each branch gets an MX record on your-branch.branch-deploy.com.

Herein lies the problem. While you can have a wildcard record for branch-deploy.com, if an MX record (or any other record, really) exists for a given subdomain and you try to visit your-branch.branch-deploy.com, that A/AAAA/CNAME resolution will not climb the tree to the wildcard. 🙃
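To make the rule concrete, here is a minimal sketch of wildcard matching as I read the RFC text quoted above. The zone contents, the `lookup` and `name_exists` helpers, and the address `10.0.0.1` are invented for illustration; a real authoritative server also handles CNAMEs, delegations, and more.

```python
from typing import Dict, Optional, Tuple

Zone = Dict[str, Dict[str, str]]  # owner name -> {record type: value}


def name_exists(zone: Zone, name: str) -> bool:
    """A name exists if it owns records or has any descendant that does."""
    return any(owner == name or owner.endswith("." + name) for owner in zone)


def lookup(zone: Zone, qname: str, qtype: str) -> Tuple[str, Optional[str]]:
    # 1. If the name exists at all, wildcards never apply to it.
    if name_exists(zone, qname):
        value = zone.get(qname, {}).get(qtype)
        return ("NOERROR", value) if value is not None else ("NODATA", None)

    # 2. Otherwise find the closest existing ancestor of the query name...
    labels = qname.split(".")
    for i in range(1, len(labels)):
        encloser = ".".join(labels[i:])
        if name_exists(zone, encloser):
            break
    else:
        return ("NXDOMAIN", None)  # nothing in this zone encloses the name

    # 3. ...and only a wildcard directly below that ancestor can answer.
    value = zone.get("*." + encloser, {}).get(qtype)
    if "*." + encloser not in zone:
        return ("NXDOMAIN", None)
    return ("NOERROR", value) if value is not None else ("NODATA", None)


ZONE: Zone = {
    "*.branch-deploy.com": {"A": "10.0.0.1"},  # wildcard pointing at the shared ALB
    "your-branch.branch-deploy.com": {"MX": "10 mail.branch-deploy.com"},
}
```

With this zone, `lookup(ZONE, "other.branch-deploy.com", "A")` is answered by the wildcard, while `lookup(ZONE, "your-branch.branch-deploy.com", "A")` comes back empty because the MX record makes that name exist. Names underneath it, such as `a.your-branch.branch-deploy.com`, are not covered by the wildcard either.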

This is likely a well-known quirk (is it even a quirk or is it common sense? it surely wasn’t common sense for me), but I blew half a day banging my head against my desk trying to figure out why this wasn’t working because I made a bad assumption and I really needed to vent about it. Thank you for indulging me.

Note: I edited this post on Nov 5, 2020 to include the prices paid for all carbon offsets and explain a little more about the 7,000 tCO₂e cumulative carbon footprint following a question from a reader. Thanks!

Basecamp has purchased 7,000 verified carbon credits to offset our first twenty years of business (1999-2019)

By purchasing carbon credits, we financially support projects that sequester additional emissions to compensate for Basecamp’s historical direct and indirect emissions.

Many have written on the promise and perils of carbon credits. I’ll sum it by saying: carbon credits are not a panacea to the climate crisis, but they are part of the path to net zero emissions. As a company, we still have a lot of work to do to chart a viable path to reducing our overall emissions such that we’re only offsetting what we cannot mitigate.

As we researched carbon credits, we used the following core tenets to guide our decision. These tenets build on standard guidelines for high-quality carbon credits, as explained in the helpful Carbon Offset Guide from the Greenhouse Gas Management Institute and the Stockholm Environment Institute, with additional factors in recognition that efforts to reduce carbon emissions do not exist in a vacuum. Environmental sustainability intersects with many other issues, from economic justice to international systems that have long disadvantaged nations that now disproportionately face the negative impacts of climate change.

Standard guidelines:

- Additionality: some assurance that the sequestered and/or avoided carbon emissions are higher than the business-as-usual baseline. For forestry projects for instance, you can look at whether the adjacent land has been converted into farms or commercial timber plots. Or for alternative energy projects, you assess financial feasibility in the absence of carbon credit financing.

- Reasonable Estimation: a carbon credit is 1 tCO₂e sequestered or avoided. To get from a project design and implementation to credits registered and sold, you need data: for instance, about the kinds of trees planted and how much carbon those species sequester at different ages. You also need to account for what happens if something goes wrong: e.g. a wildfire or displacement of emission-generating projects to other areas. There are methodological standards for quantifying carbon credits for different kinds of projects. Each project we’ve purchased carbon credits from meets at least one if not two of those standards.

- Permanence: because the effects of greenhouse gases in the atmosphere are long-term, we also need long-term sequestration of carbon. Biosphere-based projects such as forestry carry higher risk for reasons ranging from wildfires to competing uses of land. That’s why it’s important to consider protections put in place (e.g. conservation easements), buffer supplies, and ongoing monitoring to mitigate the risk.

- Exclusive: we want to make sure we’re buying carbon credits that have not already been claimed by others. Each project we considered works with an official registry that handles the accounting to make sure double-counting doesn’t happen.

- Co-benefits: besides carbon sequestration and/or avoidance, do the projects have other benefits such as preserving or restoring biodiversity? Income generation for the local community?

Additional tenets:

- Some US presence: the US and other developed nations have contributed far more to the current climate crisis than emerging nations. I felt it was a moral imperative for Basecamp, an American company, to invest in some carbon credits generated from US-based projects as a result.

- Community-centered: how money flows matters. We sought out projects where the majority of the financial benefit from the carbon credits went to the community putting in the work.

- Transparent platforms: some carbon credit projects work directly with small-volume purchasers but others instead rely on middle-layer brokers that connect individual buyers to projects. We evaluated broker platforms on whether they emphasized mitigation first and then offsetting, how they approached teaching their potential customers about carbon credits, and how transparent they were about their finances.

Earlier this year, we calculated our 2019 carbon footprint in detail. We also roughly estimated our cumulative footprint based on key evolutions to the company’s infrastructure and staffing since 1999, rounding up generously to err on the side of over-compensating rather than under-compensating.

To offset Basecamp’s historical emissions from 1999-2019, we purchased credits from the following portfolio of projects:

- 2,500 credits from Klawock Heenya Corporation via Cool Effect (US) for $10.99/credit

The Klawock Heenya Corporation is owned and operated by indigenous Alaskan Natives. The corporation owns land in Alaska, given back by the US government as part of the Alaska Native Claims Settlement Act of 1971. Rather than commercially logging the forest on that land, the corporation has created a carbon credit project so that their community can earn a living while stewarding the forest. We purchased these credits via Cool Effect, a nonprofit platform focused on connecting individuals and businesses with high-quality carbon credit projects.

- 2,500 credits from TIST directly (Uganda) for $12/credit

TIST stands for The International Small Group and Tree Planting Program. It’s a program as old as Basecamp: founded in 1999. The program works with self-organized smallholder farmer groups that decide to plant indigenous trees on their land, reforesting otherwise degraded and unused land that was previously logged. Today, over 90,000 smallholder farmers take part in TIST across Tanzania, Uganda, Kenya, and India, earning income through the carbon credits and the literal fruits of their labor. We directly purchased our carbon credits from TIST as the option was available.

- 2,000 credits from Bhadla Solar Power Plant via GoClimate (India) for $6.33/credit

We wanted to diversify our portfolio of carbon credit projects and investing in more renewable energy infrastructure was an appealing alternative. India has an aggressive renewable energy strategy and the Bhadla Solar Power Plant is a big part of it. The government has taken barren land in an area that’s typically 47℃ (116℉) and begun converting it into the world’s largest solar park. We purchased these credits via GoClimate, a Swedish enterprise that operates with fantastic transparency.
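For readers who want to check the numbers, the portfolio above can be tallied in a few lines. This is just arithmetic on the quantities and per-credit prices listed in this post; it assumes those prices are all-in and ignores any platform fees or taxes, which aren't stated here.

```python
from decimal import Decimal

# (project, credits purchased, price per credit in USD), as listed above
PORTFOLIO = [
    ("Klawock Heenya Corporation via Cool Effect", 2500, Decimal("10.99")),
    ("TIST (direct)", 2500, Decimal("12")),
    ("Bhadla Solar Power Plant via GoClimate", 2000, Decimal("6.33")),
]

total_credits = sum(credits for _, credits, _ in PORTFOLIO)
total_cost = sum(credits * price for _, credits, price in PORTFOLIO)
average_price = total_cost / total_credits

if __name__ == "__main__":
    for name, credits, price in PORTFOLIO:
        print(f"{name}: {credits} x ${price} = ${credits * price}")
    print(f"Total: {total_credits} credits for ${total_cost}")
    print(f"Average: ${average_price:.2f} per credit")
```

The three purchases add up to the 7,000 credits mentioned at the top of the post, at a blended price of roughly $10 per credit.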

We’ve also invested $100k to permanently sequester 116tCO₂ through Climeworks

Humanity has under a decade to get to net zero emissions and stay within the 1.5°C carbon budget. To stay within our budget, we’ll likely need technologies that don’t yet exist at scale today. Climeworks directly captures carbon dioxide from the air and, in partnership with CarbFix, sequesters that CO₂ into stone. What’s particularly appealing about this sort of technology is the much smaller land and water footprint requirement than other sequestration methods. Climeworks has a detailed scale-up roadmap in place, which should eventually lower the cost-per-ton sequestered to ~$100 and make the technology widely attainable. We’re happy to be an early purchaser to help with this work.
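A quick back-of-the-envelope calculation shows how far today's price is from that target. It uses only the figures quoted above ($100k for 116 tCO₂, and a ~$100 target) and treats the $100k as an exact amount, which is an assumption.

```python
invested_usd = 100_000      # "$100k", treated as exact for this estimate
tonnes_sequestered = 116    # tCO2 permanently sequestered
target_usd_per_tonne = 100  # the roadmap's approximate long-term target

cost_per_tonne = invested_usd / tonnes_sequestered
reduction_factor = cost_per_tonne / target_usd_per_tonne

if __name__ == "__main__":
    print(f"Current cost: about ${cost_per_tonne:.0f} per tonne")
    print(f"Needs to fall by a factor of about {reduction_factor:.1f}")
```

That works out to somewhere above $850 per tonne today, so the roadmap implies a cost reduction of more than eightfold.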

Our work and learning continues

Since our public commitment to getting to carbon negative, we’ve continued to connect with other companies and groups that have been doing this work and are at various parts of their journey. I want to give a shout-out to the ClimateAction.tech community. I also want to thank my colleague Elizabeth Gramm for helping vet projects; Stacy Kauk for sharing more about Shopify’s thinking behind carbon credit purchases; Trevor Hinkle and David Mytton for sharing research and thoughts on the carbon footprint of cloud services; and the fine folks at Whole Grain Digital, whom I haven’t had the pleasure of speaking with directly but am a fan of for their Sustainable Web Manifesto and excellent newsletter on digital sustainability.

Here at Basecamp, we still have work to do. While we’ll continue to purchase carbon credits to offset emissions moving forward, mitigation is the more critical part of the work. In 2020, our carbon footprint will be lower than 2019’s. However, that reduction is largely because of the global pandemic, not conscious decisions we’ve made. We’ll need to make those decisions deliberately after a vaccine has been well-distributed, and we need to get more granular, reliable data behind our largest emission driver: cloud hosting. As we do the work, we’ll share more updates.

Since the day we launched it, we’ve aimed to take the security bug bounty program public—to allow anyone, not just a few invited hackers, to report vulnerabilities to us for a cash reward. We want to find and fix as many vulnerabilities in our products as possible, to protect our customers and the data they entrust to us. We also want to learn from and support the broader security community.

We’re happy to announce that we’re doing that today. The Basecamp security bug bounty program is now open to the public on HackerOne. Our security team is ready to take vulnerability reports for Basecamp 3 and HEY. Bounties range from $100 to $10,000. We pay more for more severe vulnerabilities, more creative exploits, and more insightful reports.

Here are some of the high-criticality reports we’ve fielded via the security bug bounty:

- Jouko Pynnönen reported a stored cross-site scripting (XSS) vulnerability in HEY that led to account takeover via email. We awarded $5,000 for this report.

- Hazim Aslam reported HTTP desynchronization vulnerabilities in our on-premises applications that allowed an attacker to intercept customer requests. We awarded $11,437 in total for these reports.

- hudmi reported that the AppCache web API (since deprecated and removed from web browsers) could be used to capture direct upload requests in Basecamp 3. We awarded $1,000.

- gammarex reported an ImageMagick misconfiguration that allowed remote code execution on Basecamp 3’s servers. We awarded $5,000.

Check out the full program policy on HackerOne. For information on what to expect when you report a vulnerability, see our security response policy. If you have any questions, don’t hesitate to reach out to [email protected].

To us, expedited delivery meant overnight delivery. That’s what we had in our head. Our experiences elsewhere equated expedited with overnight, but expedited isn’t overnight – it just means faster, prioritized, enhanced, sooner. But it doesn’t mean overnight. Expedited is relative, not absolute. If standard shipping takes 7 days, expedited could mean 5.

Of course, as you’ve probably guessed, the stuff we thought would come overnight didn’t come overnight. A harsh call the next day to the vendor ultimately got us the hardware overnight the next day, but we lost a day in the exchange.

What we had in front of us was an illusion of agreement. We thought a word meant one thing, the other side thought it meant something else, and neither of us assumed mismatched alignment on the definition. Of course we agreed on what expedited meant, because it was so obvious to each of us. Obviously wrong.

This happens all the time in product development. Someone explains something, you think it means one thing, the other person thinks it means something else, but the disagreement isn’t caught – or even suspected – so all goes as planned. Until it goes wrong and both sides look at each other unable to understand how the other side didn’t get it. “But I thought you…” “Oh? I thought you…” “No I meant this…” “Oh, I thought you meant that…”. That’s an illusion of agreement. We covered the topic in the “There’s Nothing Functional about a Functional Spec” essay in Getting Real.

We knew better, but we didn’t do better.

Next time you’re discussing something with someone — inside or outside your organization — and you find the outcome contingent upon a relative term or phrase, be sure to clarify it.

If they say expedited, you say “we need it tomorrow morning, October 3. Will we have it tomorrow, October 3?”. That forces them into a clear answer too. “Yes, you’ll have it tomorrow, October 3” or “No, we can’t do that” or whatever, but at least you’re funneling towards clarity. If they say “Yes, we’ll expedite it” you repeat “Will we have it tomorrow, October 3?” Set them up to give you a definitive, unambiguous answer.

And remember, while we now know that “expedited” is relative, “overnight” can be too depending on where someone’s shipping something from, what time zone they’re in, their own internal cutoffs for overnight shipping, etc. Get concrete, get it in writing, and get complete clarity. Slam the door shut on interpretation. Get definitive.

So when he asked me to help him with something, I jumped at the chance. In this case, it was writing a foreword for his new book Demand-Side Sales 101: Stop Selling and Help Your Customers Make Progress. Bob and I have talked sales for years, and I’m so pleased his ideas are finally collected in one place, in a form anyone can absorb. I highly recommend buying the book, reading the book, absorbing the book, and putting some new ideas in your head.

To get you started, here’s my foreword in its full form:

—

I learned sales at fifteen.

I was working at a small shoe store in Deerfield, Illinois, where I grew up. I loved sneakers. I was a sneakerhead before that phrase was coined.

I literally studied shoes. The designs, the designers, the brands, the technologies, the subtle improvements in this year’s model over last year’s.

I knew it all, but there was one thing I didn’t know: nothing I knew mattered. Sure it mattered to me, but my job was to sell shoes. I wasn’t selling shoes to sneakerfreaks like me, I was selling shoes to everyday customers. Shoes weren’t the center of their universe.

And I wasn’t alone. The companies that made the shoes didn’t have a clue how to sell shoes either.

Companies would send in reps to teach the salespeople all about the new models. They’d rattle off technical advancements. They’d talk about new breakthroughs in ethylene-vinyl acetate (EVA) which made the shoes more comfortable.

They’d talk about flex grooves and heel counters and Texon boards. Insoles, outsoles, midsoles.

And I’d be pumped. Now I knew everything I needed to know to sell the hell out of these things.

But when customers came in, and I demonstrated my mastery of the subject, they’d leave without buying anything. I could show off, but I couldn’t sell.

It wasn’t until my manager encouraged me to shut up, watch, and listen that this changed. Give people space, observe what they’re interested in, keep an eye on their behavior, and be genuinely curious about what they wanted for themselves, not what I wanted for them. Essentially, stop selling and start listening.

I noticed that when people browsed shoes on a wall, they’d pick a few up and bounce them around in their hand to get a sense of the heft and feel. Shoes go on your feet, but people picked the shoe with their hands. If it didn’t feel good in the hand, it never made it to their foot.

I noticed that if someone liked a shoe, they put it on the ground next to their foot. They didn’t want to try it on yet, they simply wanted to see what it looked like from above. Companies spend all this time making the side of the shoe look great, but from the wearer’s perspective, it’s the top of the shoe against their pants (or socks or legs) that seems to have an outsized influence on the buying decision.

I noticed that when people finally got around to trying on a shoe, they’d lightly jump up and down on it, or move side-to-side, simulating some sort of pseudo physical activity. They were trying to see if the shoe “felt right.” They didn’t care what the cushioning technology was, only that it was comfortable. It wasn’t about if it “fit right,” it was about if it “rubbed wrong” or “hurt” or felt “too hard.”

And hardly anyone picked a shoe for what it was intended for. Runners picked running shoes, sure, but lots of people picked running shoes to wear all day. They have the most cushion, they’re generally the most comfortable. And lots of people picked shoes purely based on color. “I like green” was enough to turn someone away from a blue shoe that fit them better.

Turns out, people had different reasons for picking shoes. Different reasons than my reasons, and far different reasons than the brand’s reasons. Hardly anyone cared about this foam vs. that foam, or this kind of rubber vs. that kind. They didn’t care about the precise weight, or that this brand shaved 0.5oz off the model this year compared to last. They didn’t care what the color was called, only that they liked it (or didn’t). The technical qualities weren’t important – in fact, they were irrelevant.

I was selling all wrong.

And that’s really what this book is about. The revelation that sales isn’t about selling what you want to sell, or even what you, as a salesperson, would want to buy. Selling isn’t about you. Great sales requires a complete devotion to being curious about other people. Their reasons, not your reasons. And it’s surely not about your commission, it’s about their progress.

Fast forward twenty-five years.

Today I don’t sell shoes, I sell software. Or do I?

It’s true that I run a software company that makes project management software called Basecamp. And so, you’d think we sell software. I sure did! But once you meet Bob Moesta and Greg Engle, you realize you probably don’t sell what you think you sell. And your customers probably don’t think of you the way you think of yourself. And you almost certainly don’t know who your competition really is.

Over the years, Bob’s become a mentor to me. He’s taught us to see with new eyes and hear with new ears. To go deeper. To not just take surface answers as truth. But to dig for the how and the why—the causation. To understand what really moves someone to want to make a move. To understand the events that drive the purchase process, and to listen intently to the language customers use when they describe their struggles. To detect their energy and feel its influence on their decisions.

Everyone’s struggling with something, and that’s where the opportunity lies to help people make progress. Sure, people have projects, and software can help people manage those projects, but people don’t have a “project management problem.” That’s too broad. Bob taught us to dig until we hit a seam of true understanding. Project management is a label, it’s not a struggle.

People struggle to know where a project stands. People struggle to maintain accountability across teams. People struggle to know who’s working on what, and when those things will be done. People struggle with presenting a professional appearance with clients. People struggle to keep everything organized in one place so people know where things are. People struggle to communicate clearly so they don’t have to repeat themselves. People struggle to cover their ass and document decisions, so they aren’t held liable if a client says something wasn’t delivered as promised. That’s the deep down stuff, the real struggles.

Bob taught us how to think differently about how we talk, market, and listen. And Basecamp is significantly better off for it. We’ve not only changed how we present Basecamp, but we’ve changed how we build Basecamp. We approach design and development differently now that we know how to dig. It’s amazing how things can change once you see the world through a new lens.

Sales is everything. It’s survival. From selling a product, to selling a potential hire on the opportunity to join your company, to selling an idea internally, to selling your partner on this restaurant vs. that one, sales touches everything. If you want to be good at everything else, you better get good at this. Bob and Greg will show you how.
