We don’t need to wait for the singularity before artificial intelligence becomes capable of turning the world into a dystopian nightmare. AI-branded algorithms are already serving up new portions of fresh hell on a regular basis. But instead of worrying about runaway computers, we should be worrying about the entrepreneurs who feed them the algorithms, and the consumers who mindlessly execute them.

It’s not that Elon Musk, Stephen Hawking, et al. are wrong to ponder whether Skynet might one day decide that humankind is a bug in the code of the universe that should be eliminated. In much the same way that evangelicals aren’t necessarily wrong to believe that the rapture will at some point prove the end of history. Having faith in supernatural stories about vengeful deities condemning us all to an eternity of misery has been a bedrock pastime since the cognitive revolution. Precisely because there’s no scope for refuting such a story today.

It’s just that such a preoccupation with the possible calamities of tomorrow might distract us from dealing with the actual disasters of today. And algorithmic disasters are not only already here, but growing in scale, impact, and regularity.

A growing body of work is taking the algorithms of social media to task for optimizing for addiction and despair. Whipping its users into the highest possible state of frenzy, anxiety, and envy. Because that’s the deepest well of engagement to draw from.

We’re made increasingly miserable because connect-the-world imperialists are unleashing machine learning on our most vulnerable and base impulses. A constant loop of refinement that prods our psyche for weak spots, and then exploits them with maximal efficiency. All in the service of selling ads for cars, shampoo, or political discord.

Such exploitive self-optimizing AI is hardly constrained to social media, though. Although companies like Facebook are probably using it to inflict the broadest harm on the most people at the moment.

Yet however depressing it is to learn about how Facebook finds ways to deduce our precise mental state, and then agitate it further, there’s at least some plausible deniability. It’s possible to imagine that Zuckerberg and Sandberg really do believe that they’re actually doing something good for the world, even if evidence to the contrary is mounting at a rate much faster than they can both feign surprise and outrage.

It’s harder to argue for plausible deniability when airlines employ AI-branded algorithms to spread families out across the plane, lest they pay a specific-seating ransom fee.

Or that the revenue-maximizing AI that runs the driver incentive programs for ride-sharing companies isn’t preying specifically on common cognitive frailties.

But at least those algorithms actually kinda work, however diabolical their purpose. That’s bad, but it’s a known kind of bad. A bad that you can reason about.

The AI apocalypse that’s really scaring me at the moment is the snake-oil shit show that is Predictim. Here’s a company that preys on the natural fears of parents everywhere of leaving their child with an abusive caretaker to sell get-the-fuck-outta-here abusive vetting services. This is their pitch:

Predictim is the Tool All Parents Need

221. It is a simple number that can mean nearly anything, right?

Well, in this case, it means how many charges a nightmare babysitter is facing out of rural Pennsylvania for abusing and torturing children she and a friend were in charge of watching.

A mother needed to go out of town, so she simply leaned on a person she’d trusted her children with in the past.

This time, however, the babysitter was out for pain.

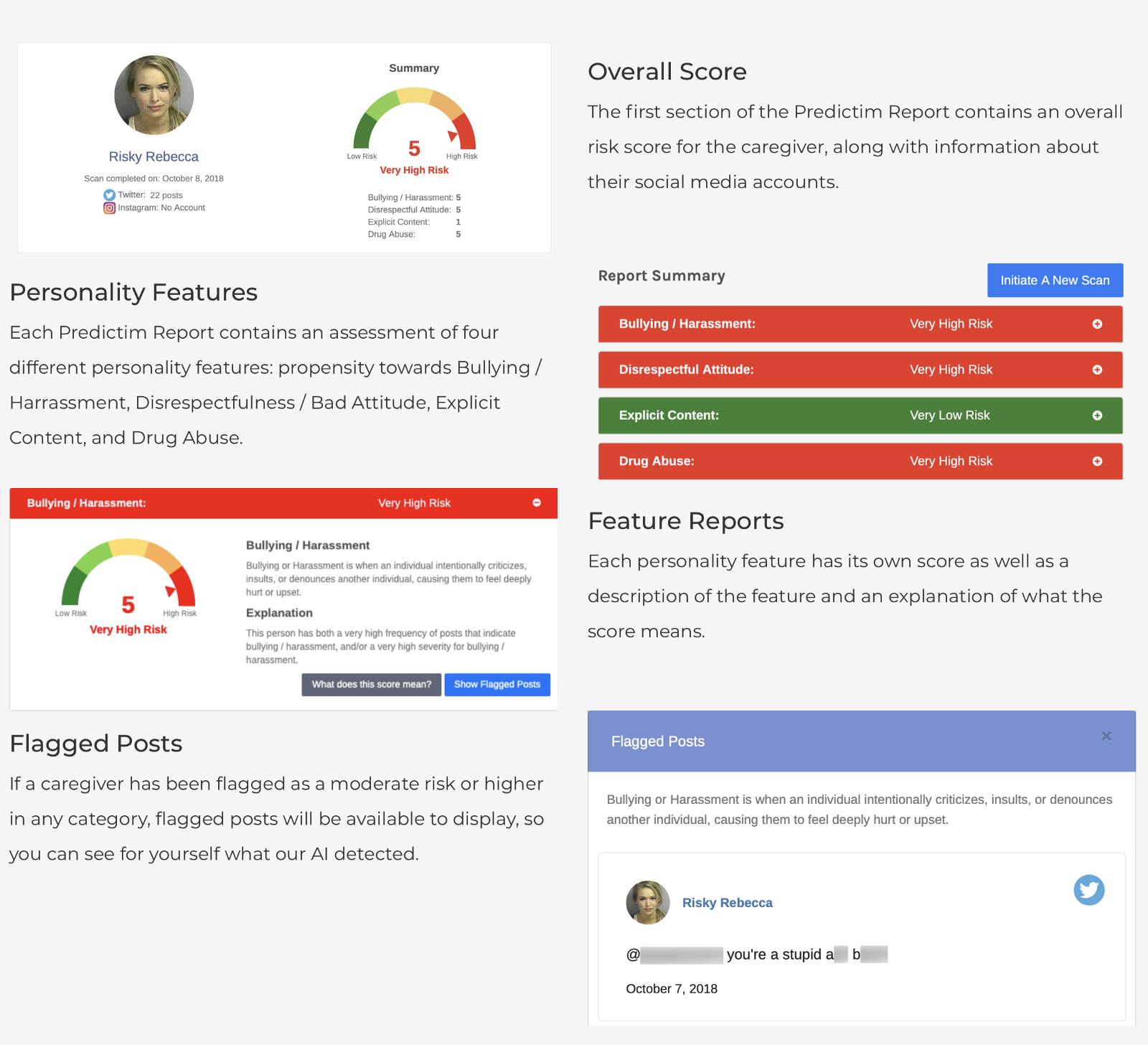

Probably frightened now? If you have kids, of course you are. So now you’re in the perfect state of mind to buy their truly dystopian huckster solution: Predictim is going to ask potential babysitters to hand over their logins to all their social-media accounts such that the black-box AI algorithm can analyze them for drug use, explicit content, and bullying or disrespectful behavior. The output is a single, final one-out-of-five score for how “risky” the babysitter is deemed to be (which isn’t shared with the candidate).

It’s hard to know where even to begin with this fresh hell. But let’s pick the core idea that AI can deduce whether someone has a “disrespectful attitude” with any level of certainty based on 22 Twitter posts. Setting aside the ludicrous lack of a significant statistical sample, just try to imagine diagnosing sarcasm, justified outrage, in-group jokes, quotes, or any of the million other forms of expression that a tweet might take. AI is not within a hundred miles of being able to reliably deduce the intent or justification behind them. Hell, I have my tweets misunderstood by highly-educated humans half the time!

So that’s the first conclusion: There’s no way this even fucking works! Simply no way. How on earth can a company like Predictim sell and market a solution like this as though it does? In the same way horoscopes get away with it in the newspaper. By essentially declaring that this is not actual advice and should be regarded as for entertainment purposes only. Or in Predictim’s own words:

Disclaimer: This analysis is based solely on an analysis of the potential caregiver’s social media accounts to which they gave us access, which may or may not accurately reflect the caregiver’s actual attributes. As a result, we cannot provide any guarantee as to the accuracy of the analysis in the report or whether the subject of this report would be suitable for your needs… We are not responsible or liable in any manner for the performance or conduct of any caregivers or for your interactions or dealings with them. ALL USER OF THIS REPORT OR SERVICE IS AT YOUR SOLE AND EXCLUSIVE RISK.

This is of course a complete contradiction to what their marketing material claims:

There are lots of ways to find someone to look after children – agencies, online marketplaces and recommendations from friends and family. However, many of these online market places don’t provide comprehensive vetting, and don’t take responsibility for the actions of their sitters.

Predictim is going to keep your family safe, or not, whatever. It’s going to ensure that you don’t hire Risky Rebecca, or not, maybe, perhaps, we don’t know! This is that infamous Silicon Valley style of promising the world yet accepting zero responsibility for the consequences of its actions.

Let’s talk about those consequences. Asking babysitters, who may even be minors, to hand over the keys to their private social media accounts is not only a despicable violation of their privacy, it’s also extremely ripe for abusive and exploitive behavior.

Given such high criticality (dealing with the private and perhaps even intimate data of babysitters), you’d at the very least want some iron-clad assurances about how that data is stored and used. I mean, that still wouldn’t make this in any way alright, but let’s just play along for a second. What assurances does Predictim offer that this data is secure and protected?

“If we ever leaked a babysitter’s info, that would not be cool,” Simonoff said.

No, that really wouldn’t be cool, Joel. But forgive me if I don’t have such high confidence in the moral integrity of a college grad who’s about the same age as Zuckerberg was when he pondered why the “dumb fucks” trusted him with their information.

ZUCK: yea so if you ever need info about anyone at harvard

ZUCK: just ask

ZUCK: i have over 4000 emails, pictures, addresses, sns

FRIEND: what!? how’d you manage that one?

ZUCK: people just submitted it

ZUCK: i don’t know why

ZUCK: they “trust me”

ZUCK: dumb fucks

Yet, this is even worse. Much worse. These babysitters may not even be college age yet. And let’s see what’s in the CTO’s head when he thinks about the “explicit content” that should ding someone’s score on Predictim:

A picture of a girl in a bikini.

What odds would you put on the prospect that Predictim’s technical team has built an Uber-style God View that allows them to ogle those “explicit content” hits of girls in bikinis? I’d say better than even.

But here comes the most depressing part. According to the Washington Post, there is a ready market of parents eager to let the “advanced artificial intelligence” violate the privacy and dignity of babysitters such that they can reduce candidates to a single score.

But Diana Werner, a mother of two living just north of San Francisco, said she believes babysitters should be willing to share their personal information to help with parents’ peace of mind.

“A background check is nice, but Predictim goes into depth, really dissecting a person — their social and mental status,” she said.

Where she lives, “100 percent of the parents are going to want to use this,” she added. “We all want the perfect babysitter.”

“The perfect babysitter” sounds like a title of a horror movie. And that’s pretty apt, considering that psychopaths probably wouldn’t have much trouble manufacturing that wholesome appearance of goodness on some fake-ass social media accounts.

But I’ll excuse Ms Werner. Maybe she’s not in the technology business. When you have Musk and Hawking waving their hands wildly about how The Great AI is going to render us all slaves so soon that we need emergency legislation about it, I can’t fault people for thinking that it’s already good enough to assess the personality of a babysitter.

I have a much harder time excusing technologists who really should know better. Here’s Chris Messina endorsing the idea that every parent should be using Predictim or the like, EVEN IF IT DOESN’T WORK:

I mean, this is inevitable, even if the recommendations are "questionable". What parent WOULDN'T consult such a service if it were widely available? https://t.co/6yiUDduRZx

— 𝙲𝚑𝚛𝚒𝚜 𝙼𝚎𝚜𝚜𝚒𝚗𝚊 (@chrismessina) November 24, 2018

As I said, the AI apocalypse has already begun. The bugle has been blown, and parents like Ms Werner and Mr Messina are only all too eager to indulge in whatever monstrous invasions of privacy and dignity the Great AI in The Cloud demands.

But it’s not too late to fight back. Unlike when Skynet one day might take over, we actually have all the advantages in the fight of today. We can choose to reject, regulate, and ridicule when AI-branded algorithms turn against our better nature. Progress isn’t a straight line, and there are a lot of dead ends en route.

The Great AI in the Cloud is not yet an omniscient being, and we absolutely should question how it works in all of its mysterious ways. Chances are good that when we do, we’ll see it’s just two rubber bands of questionable ethics holding together four cans of bullshit.