Some of you may have noticed over the past week or so that Basecamp has felt a bit zippier. Good news: it wasn’t your imagination.

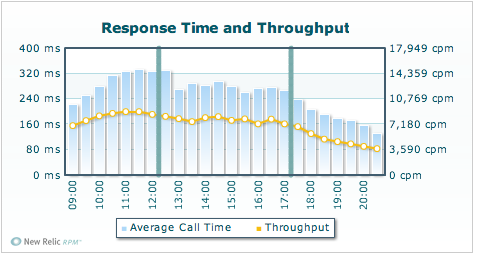

Let’s set the stage. Below, I’ve included a chart showing our performance numbers for Monday from four weeks ago. We can see that at the peak usage period between 11 AM and noon Eastern, Basecamp was handling around 9,000 requests per minute. In the same time period, it was responding in around 320 ms on average, or roughly 1/3 of a second. I know quite a few people who would be very pleased with a 320 ms average response time, but I’m not one of them.

June 29, 2009 – 09:00 – 21:00 EDT

For months, we’ve been running our applications on virtualized instances. We have a bunch of Dell 2950 servers, each with 8×2.5 GHz “Harpertown” CPU cores (Intel Xeon L5420) and 32GB of RAM that we use to run our own private compute cloud. A typical Basecamp instance in this environment has 4 virtual CPUs allocated to it and 4GB of RAM. At the time the chart above was created, we had 10 of these instances running.

For some time I’ve wanted to run some tests to see what the current performance of Basecamp on the virtualized instances was versus the performance of dedicated hardware. I finally found the time to run these tests a few weeks ago. We had just ordered a few new Dell 2950 virtualization servers and I decided that I would run my test on one of them before putting it into production in our private cloud.

Similarly, I’ve been curious to see how the newest Intel Xeons, code named “Nehalem”, would perform with a production load. Since we host all our infrastructure with Rackspace it was a pretty simple matter to get a new Dell R710 installed in our racks to include in the testing. The R710 was configured with 8×2.27 GHz “Nehalem” CPU cores (Intel Xeon L5520) and 12GB of RAM.

Once we had the servers in place, I quickly installed a base operating system and configured them to act as Basecamp application servers using our Chef configuration management recipes and put them into production. To make a long story a little less long, we saw some pretty extreme performance improvements from moving Basecamp out of a virtualized environment and back onto dedicated hardware.

The Nehalem vs. Harpertown battle was a bit harder fought, with the R710 giving roughly a 20-25% response time improvement versus the older 2950 as long as they both still had excess CPU capacity. While that was an interesting number, I suspected that it didn’t tell the whole story, and I wanted to see how they performed when they got close to being saturated. Throughout the day, I increased the load that the R710 and 2950 were handling until I was able to saturate the 2950 and see response times start to degrade rapidly. When I reached that point, the R710 still had roughly 30% of its CPU capacity idle.

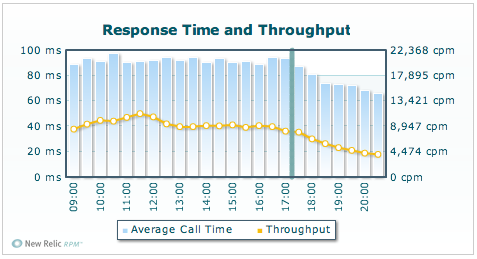

Armed with these numbers, we decided to get some additional R710 servers and move all of Basecamp’s application server processing to them. A week or so later, that transition is complete and we end up with the chart below.

July 27, 2009 – 09:00 – 21:00 EDT

Narrating this chart like I did the one above, we see that the peak traffic was between 10 AM and 11 AM Eastern, with Basecamp handling a little over 11,000 requests per minute. During this time period, the average response time remained a hair under 100 ms, or 1/10 of a second.

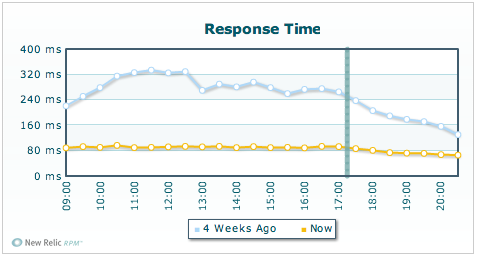

We were able to cut response times to about 1/3 of their previous levels even when handling over 20% more requests per minute.
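To make that arithmetic explicit, here is a small sketch using the approximate figures read off the two charts (around 9,000 requests per minute at 320 ms, versus a little over 11,000 at just under 100 ms). The rounded inputs and the helper name `percent_change` are illustrative assumptions, not exact measurements.

```python
def percent_change(before: float, after: float) -> float:
    """Relative change from `before` to `after`, as a percentage."""
    return (after - before) / before * 100.0


# Approximate peak-hour figures quoted in the post (rounded, not exact).
june_rpm, june_ms = 9_000, 320
july_rpm, july_ms = 11_000, 100

throughput_gain = percent_change(june_rpm, july_rpm)
response_ratio = july_ms / june_ms

print(f"Throughput change: {throughput_gain:+.1f}%")   # +22.2%
print(f"Response time ratio: {response_ratio:.2f}")     # 0.31
```

With these rounded inputs, throughput is up a little over 20% while the average response time is a bit less than a third of what it was.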

July 27, 2009 vs. June 29, 2009.

We’ll continue to strive to make Basecamp and our other applications as fast as possible. We hope you’re enjoying the performance boost.

Special thanks to New Relic for the absolutely phenomenal RPM performance monitoring tool for Rails applications. It would have been much more difficult for me to run the performance tests I did without it. We use it to constantly monitor our application performance and it hasn’t let us down yet.

Bob Martens

on 28 Jul 09

Wow! Nice work on your end.

We had an “argument” with some consultants about the pros and cons of virtualization for some mission-critical servers here at work and luckily won out. Cool to see some more support in certain situations.

Greg

on 28 Jul 09

Thanks for the write-up on your tests, Mark! Always great to see behind the curtain.

It would have been interesting to see a head-to-head comparison of the physical 2950 with 4 CPUs/4GB of RAM vs a 2950 running virtualized with 4 vCPUs/4GB of RAM. It seems pretty obvious that Basecamp would run faster on a physical machine with more CPUs and memory than your virtual instances of Basecamp.

I was also wondering about how saturated your virtual instances were getting at peak times. Would you have seen a similar decrease in response time had you added a few more virtual instances of Basecamp?

David

on 28 Jul 09

I’ll trust that things got faster but your writeup isn’t terribly clear. Did you isolate each change to your environment (moving out of virtualization and the processor change) separately? And when you moved out of virtualization, was your dedicated server the same specs as the virtualized server?

Ken Collins

on 28 Jul 09

Thanks for the post Mark! Good stuff.

MI

on 28 Jul 09

Greg: When I did the initial test, we compared our traditional virtual instances versus the dedicated 2950 and R710. Obviously, the dedicated machines had double the number of CPU cores so we took that into account by sending 2x as much load to them as we did to the virtual instances. Then I took it a step further and sent 4x more traffic to the dedicated machines and I still saw better performance than the virtual instances.

The virtual instances were not all that saturated; they reported 40-50% idle CPU on average.
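The reasoning about compensating for the extra cores can be sketched as a per-core load calculation. The `Host` class and the `traffic_weight` field are hypothetical names used only for illustration; the core counts (4 vCPUs versus 8 physical cores) and the 2x and 4x traffic multiples are the ones mentioned in this thread.

```python
from dataclasses import dataclass


@dataclass
class Host:
    name: str
    cores: int             # CPU cores (or vCPUs) available to the app
    traffic_weight: float  # traffic relative to one virtual instance

    def load_per_core(self) -> float:
        """Relative traffic each core has to absorb."""
        return self.traffic_weight / self.cores


virtual = Host("virtual instance", cores=4, traffic_weight=1.0)
dedicated_2x = Host("dedicated, 2x traffic", cores=8, traffic_weight=2.0)
dedicated_4x = Host("dedicated, 4x traffic", cores=8, traffic_weight=4.0)

baseline = virtual.load_per_core()
for host in (virtual, dedicated_2x, dedicated_4x):
    relative = host.load_per_core() / baseline
    print(f"{host.name}: {relative:.1f}x the per-core load of a virtual instance")
```

At 2x traffic the per-core load matches that of a virtual instance; at 4x each physical core is absorbing twice as much, which is what makes the better response times in that run notable.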

MI

on 28 Jul 09

David: Yes, I tested each change in isolation, but this article would have been much longer if I had dived into each iteration. I just wanted to hit the high points. The dedicated 2950 that we tested was identical hardware to the servers that run the virtualized instances. Obviously, this means that it had more CPUs available to it (8) than the virtualized instances did (4) but we attempted to compensate for this in the testing by skewing the traffic toward the more powerful physical server.

For the record, the point of the testing wasn’t to produce an academic research paper, but to evaluate ways of making Basecamp perform faster. I think it’s fair to say that mission was accomplished. :)

Jordan Gross

on 28 Jul 09

Can you tell us what virtualisation software you were using before? We’ve tested all of the majors over the years and there is a big disparity in performance of virtual servers…

MI

on 28 Jul 09

Jordan: In this case, the virtualization was using Linux KVM. These results should also be applicable to Xen, though. We had instances up in both Xen and KVM environments for months and there was no measurable difference in Basecamp performance between the two.

Brice Ruth

on 28 Jul 09

Mark: are you sure you’re reading the RPM charts correctly? From what I can see, in your first chart (4 wks ago), you’re running a peak throughput of ~8,800 CPM and now your peak is ~10,500 CPM (hard to say exactly with the y2 scale).

Certainly the avg response times speak for themselves, though, they have definitely come down, but I don’t think that you’re quite under 1.5x the load at this point, at least, the yellow line (which should be against the y2 axis) doesn’t seem to indicate that.

-Brice

MI

on 28 Jul 09

Brice, you’re exactly right. My only defense is that I was writing this late at night and not paying enough attention. I’m going to edit the post to correct the numbers. Thanks for the gentle prod, I totally botched those numbers when I originally wrote the post.

The peak a month ago was 8,971 requests per minute and yesterday’s peak was 11,184, a 24.7% increase at 1/3 the response time.

Jordan Gross

on 28 Jul 09

So I would suspect that you’d find a far less significant performance difference on other virtualisation platforms. (We actually run all our Managed Hosting customers on VMware ESXi, and when comparing and contrasting this vs. “dedicated” physical machines, we get a low single digit difference in performance.)

MI

on 28 Jul 09

Jordan: I believe that low single digit difference is going to vary greatly depending on the workload, with heavily CPU bound workloads suffering significantly more due to contention on the CPU’s cache. This is particularly bad when virtual CPUs are not bound to physical ones and are allowed to float between physical processors, but can be mitigated in some virtualization environments.

My main problem with VMware is that it costs more than the actual server does.

Tim

on 28 Jul 09

Did I just read that correctly?

You did a performance comparison of virtualization on older hardware with less memory vs. dedicated hardware that is much newer and has about 3x more memory.

Doesn’t seem like an apples to apples comparison at all.

Tim

on 28 Jul 09

@MI

Of course buying new and much faster hardware with more memory will produce lower/faster response times (regardless of whether virtualization was used or not).

MI

on 28 Jul 09

Tim: I compared the following:

1. Virtualization on Dell 2950 with 2 x Xeon L5420, 32GB RAM. Instance size was 4 VCPUs and 4GB of RAM

2. Dedicated Basecamp instance on identical Dell 2950 above, no virtualization

3. Dedicated Basecamp instance on Dell R710, 2 x Intel Xeon L5520, 12GB RAM

RAM was not a factor at all; the virtualized instances were under no RAM pressure whatsoever. The only reason the dedicated box needs more RAM is because it has more CPU capacity and I need to run more processes to make use of it. Most of the RAM is sitting unused, but it’s easier to add it up front than to take downtime later, and RAM is dirt cheap.

With the dedicated 2950 taking 4x the traffic of the virtualized instance, the response times were still less than half those of the virtualized instance on identical physical hardware. The virtualized instance was only allowed to use 4 cores, but the physical server had to handle 4x the traffic, which more than compensates, in my estimation.

The fact that newer hardware performed better than older hardware was not at all surprising. The interesting thing was the degree to which it improved response time and throughput. A 66% reduction in the response time while handling multiples of the traffic is beyond what I expected.

Doug

on 28 Jul 09

Strangely, I’ve been thinking that my project within Basecamp has been slower this past week.

Hmm … strange.

I’m using Chrome if that makes a difference (?)

Andrew

on 28 Jul 09

A few points:

1. KVM is probably the worst performer of the bunch, especially under load

2. VMware ESXi has a free edition which is actually very powerful

3. Four vCPUs per guest may be the slowest configuration to test. Generally speaking the more vCPUs per guest the more scheduling headaches you are giving to the hypervisor, which in the case of KVM is the least equipped to deal with the problem.

I would be testing again with 2 vCPUs per guest and even 1 vCPU per guest (and make up for it by doubling or quadrupling the number of guests). I would also test Xen or ESXi. With paravirtualised network and disk drivers, and hardware-assisted MMU virtualisation (i.e. in the new Nehalem Xeons) there can be very little performance difference in VMware.

Most VPS hosting providers use Xen or VMware and not KVM for a reason!

Russ

on 28 Jul 09

Mark, thanks for that. I do a ton of virtualization.

Adding to what someone else said, I’ve found that, depending on the application, sometimes you get less performance when you increase the number of vcpus.

Have you done any testing in that area?

Interesting piece.

MI

on 28 Jul 09

Andrew,

1. Not in my experience of testing our production load on identical hardware comparing Xen vs. KVM. Our KVM instances perform essentially identically to the Xen instances that they replaced. I’m willing to believe there are use cases where this isn’t so, but ours isn’t one of them.

2. Yes, I’m aware of that but if you want any of the management software you have to shell out $$$$$.

3. Again, Xen didn’t perform any better than KVM with 4 VCPUs for our workloads. Regardless of that, even with good configuration management tools like Chef, I’m not going to run 40 guests when I can support the same load with a handful of physical servers. That just doesn’t make sense and seems to be a perfect argument for physical over virtual when the workload becomes remotely CPU intensive.

Lastly, most VPS hosting providers use Xen because KVM either didn’t exist or wasn’t very mature when they started offering hosting and VMware is cost prohibitive. I tested KVM vs. Xen pretty exhaustively in our environment and we just completed a long term project to convert all of our guests from Xen to KVM because there is no long term future for support for Xen in the Linux distributions we’re interested in using.

That said, I will always continue to evaluate different options and if there’s a better fit down the road, we’re not afraid to change course. I’ve been meaning to take a closer look at the free VMware ESXi for quite some time.

Again, the point of my blog post wasn’t to compare virtualization vs. physical performance or to downplay the utility of virtualization. We still run quite a few machines dedicated to virtualization and dozens of virtual instances and I don’t see that changing anytime soon. The point was that we saw an opportunity to improve Basecamp’s performance and we took advantage of it, it’s as simple as that.

Tim

on 28 Jul 09

@MI

But that’s exactly what you did and imply that dedicated is faster than virtualization.

MI

on 28 Jul 09

Tim: I didn’t mean to imply it, but I will say it straight out: Dedicated is faster than virtualization. But it’s still not the point.

Alasdair Lumsden

on 28 Jul 09

Hi Mark,

I’d recommend checking out Solaris’s native virtualisation technology, Solaris Zones.

Solaris Zones are akin to FreeBSD Jails, except they’re far more powerful and have a full management framework. There’s virtually no virtualisation overhead (mind the pun!), because instead of running a separate kernel for each VM as with Xen/VMWare/KVM, Solaris simply containerises the processes. Disk & Network IO doesn’t have to pass through a virtualisation layer so there’s no performance drop. For example you can run a high performance Oracle DB in a Zone – something you would never consider doing with Xen/VMWare/KVM!

It’s a serious enterprise solution and I’d recommend looking at it if you have the time and resources.

Joyent in the states and EveryCity in the UK (which I’m involved with) both use Solaris to provide cloud computing environments. I can’t talk for Joyent but we switched from being a Linux focused company to being a Solaris one over a year ago, and we’ve been blown away by the advantages. ZFS, Zones, Service Management Framework, Fault Management, DTrace, Comstar (iSCSI/FCoE) and Crossbow (High performance virtualised network stack) are all killer features that come together to make Solaris a killer platform.

And no, before you ask, I’m not paid by Sun to say this :-)

Good luck with the work to make Basecamp faster and better!

Jacob

on 28 Jul 09

“Private cloud”? (Head explodes!)

Daniel Draper

on 29 Jul 09

Where do you run your database server? We also have Dell 2950s running Xen but two of the 2950s are dedicated DB servers running on the raw iron. I suspect the performance is IO bound and having the DB right on the hardware helps massively!

MI

on 29 Jul 09

Daniel: We run our databases on 2950s and R900s with no virtualization; they’re all dedicated. The servers that I’m talking about in this article are just the application servers that host the Basecamp Rails app.

Mark

on 29 Jul 09

Thank you for the very thorough research effort and documentation of the interesting results. Not sure what caused people to virtualize web servers to begin with, but nice to see some solid analysis to support Ruby/Rails’ maturing ability to take full advantage of modern software/hardware.

Ali

on 29 Jul 09

Next thing we know, you will be rewriting all your Apps in C++ to make ’em faster!

But what about developer productivity! Wasn’t virtualization good enough! Why make things faster when they are fast enough!

Chris Nagele

on 29 Jul 09

Great post. I love getting the details like this so we can learn about growing our own products.

We’re migrating Beanstalk to Rackspace in the next few weeks with a dedicated environment and a bunch of 2950 ESX servers, so it is nice to see you have a similar setup. We looked at the R900’s as well, but will hold off until later.

Bernd Eckenfels

on 30 Jul 09

Why would you use virtualisation in the first place?

For a stateless, horizontally scaled app server where you have an automatic deployment solution I don’t see any reasons for that.

Greetings Bernd

Morne

on 30 Jul 09

I’m curious – why did you opt for full virtualization technologies such as Xen or KVM rather than an operating system virtualization technology such as OpenVZ which runs much lighter / performant, especially since you seem to have a homogeneous environment with regards to OSes (all Linux)?

Any specific reason ?

Zach

on 31 Jul 09

Awesome article, thank you for looking out for your users and the future. We are all very grateful. I think this is yet another reason why paying for a quality application like Basecamp is so needed. Great job!

This discussion is closed.