Nearly 3 years ago we asked “What would happen if a truck crashed into the datacenter?” The resulting discussion could be summarized as “Well, we would probably be offline for days, if not weeks or months. We wouldn’t have many happy customers by the time Basecamp was back online.” No one was satisfied with that answer and, in fact, we were embarrassed. So we worked really hard to be prepared with an answer that made us proud.

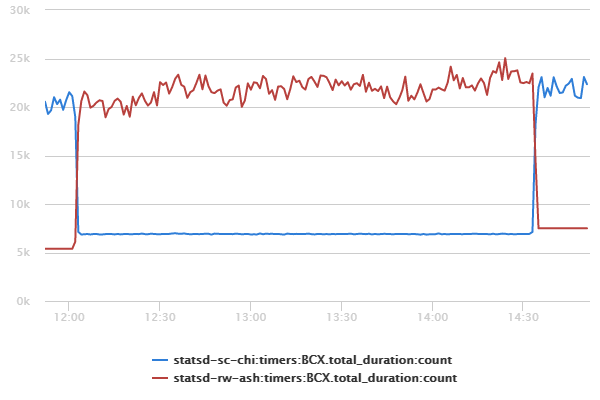

This past Sunday, February 15, 2015, we demonstrated that answer in public. With one command we moved Basecamp’s live traffic out of our Chicago, IL datacenter and into our Ashburn, VA datacenter in about 60 seconds.

Not one of our customers even noticed the change, which is exactly as we planned it. A few hours later we ran one command and moved it all back. Again, no one noticed.

This probably qualifies as the least publicly visible project at Basecamp. And we hope it stays that way. But if it doesn’t, just know Basecamp will be online even if disaster strikes.

(There’s much to share about how we accomplished this and what we learned along the way. I’ll share the technical nitty gritty in future posts.)

Bobby S.

on 19 Feb 15
Which datacenter are you guys running out of in Ashburn?

James

on 19 Feb 15
Nice to see it working so slickly. I must admit I’m a little surprised you haven’t had a viable and fast disaster plan until now, though, with so many customers relying on your services.

DHH

on 19 Feb 15
James, we’ve had a backup data center for years. We’ve also had many backup options should something like this happen (like off-site backups of databases and files), but we haven’t boiled it down to something that can run in 60 seconds. Very, very few companies have a solution like this at the moment. Ask any of your other hosted software providers, and I think you’ll see that hardly any of them have an automated contingency for a complete data center outage.

James

on 19 Feb 15
@DHH, cool, really impressive getting it boiled down to something so simple and fast.

Taylor

on 19 Feb 15
@Bobby RagingWire East and a little bit of Equinix DC2.

Ruud

on 19 Feb 15
Wow :) I don’t think you are using DNS to do the switching, so what is it? Cloudflare?

GregT

on 19 Feb 15
Hopefully someone will be around (with a computer) to execute that “one command” when the time comes.

John

on 19 Feb 15
@Ruud The switching is all DNS-based with very short TTLs.

Taylor

on 19 Feb 15
@Ruud What John said is 98% true. The other 2% is the stuff he didn’t mention that happens behind the scenes. That 2% is where the rubber meets the road.

Felipe

on 19 Feb 15
So far so good! But why don’t you run in both datacenters at once? I mean, why not run on multiple servers across multiple datacenters so you can switch over automatically?

Thanks for the article!

Ruud

on 19 Feb 15
But not all providers respect short TTL settings, right?

Justin

on 19 Feb 15
How big is the data loss window? Or, roughly how far behind are the async MySQL replicas lagging?

Bryan

on 19 Feb 15
What if both data centers go out simultaneously? I know that’s stretching it and there is only so much you can do. Just curious whether you have asked that question and, if so, whether you came up with something for it.

DHH

on 19 Feb 15
Bryan, we have all files and backups in a 3rd location as well. So that extremely unlikely contingency is also addressed, but we wouldn’t be back online in 60 seconds.
