Last week I set out to improve the performance of the Dashboard and Contacts tabs in Highrise. Both tabs were frequently much too slow, especially the Contacts tab, which for our own account could sometimes take upwards of two seconds to load.

The number one rule for improving performance is to measure; the number two rule is to measure some more; and the third rule is to measure once again, just to be sure. Guessing about performance never works, but it’s a great way to end up out in the weeds chasing phantom ponies.
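In plain Ruby, "measure, then measure again" can be as simple as timing the same block several times and looking at the median instead of trusting a single run. This is a minimal sketch; `median_ms` is a hypothetical helper, not something from Highrise or Rails.

```ruby
require "benchmark"

# Runs the block `runs` times and returns the median wall-clock time in
# milliseconds, so one unusually slow or fast run does not decide the result.
def median_ms(runs = 5)
  raise ArgumentError, "runs must be positive" unless runs.positive?

  timings = Array.new(runs) { Benchmark.realtime { yield } * 1000.0 }.sort
  mid = timings.length / 2
  timings.length.odd? ? timings[mid] : (timings[mid - 1] + timings[mid]) / 2.0
end
```

For example, `median_ms { render_contacts_tab }` would time a suspect code path, where `render_contacts_tab` is a placeholder for whatever you are investigating.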

Looking outside the epicenter

So I measured and found that part of the problem wasn’t even in the epicenter (the notes and the contacts). In fact, we were wasting a good 150ms generating the New Person/Company form sheets over and over (through a complicated Presenter object that’s high on abstraction and low on performance), even though these sheets were the same for everyone.

That left me with two choices: either I could try to speed up the code that generated the forms, or I could cache the results. Since speeding up the code would require taking everything apart, bringing out the profiler, and doing lots of plain hard work, I decided to save myself the sweat and just cache. People using Highrise wouldn’t care either way as long as things got faster, and frankly, neither would I.

I ended up with this code:

    <% cache [ 'people/new/contact_info', image_host_differentiation_key ] do %>
      <%= p.object.contact_info.shows.form %>
    <% end %>

This cache is backed by our memcached servers for Highrise. The image_host_differentiation_key makes sure that we don’t serve SSL control graphics to people using Safari/Firefox but still do so for IE, in accordance with our asset hosting strategy.
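To see why the differentiation key matters, here is a toy model of an array-keyed fragment cache in plain Ruby. A Hash stands in for memcached, the key parts are joined with "/" (an assumption for illustration, not how Rails actually builds the key), and `image_host_key` is a hypothetical stand-in for Highrise's image_host_differentiation_key.

```ruby
# Toy in-memory stand-in for memcached: one entry per distinct key.
class FragmentCache
  attr_reader :misses

  def initialize
    @store = {}
    @misses = 0
  end

  # Joins the key parts into one string key and only runs the (expensive)
  # block when that exact key has not been cached yet.
  def fetch(parts)
    key = Array(parts).map(&:to_s).join("/")
    return @store[key] if @store.key?(key)

    @misses += 1
    @store[key] = yield
  end
end

# Hypothetical stand-in for image_host_differentiation_key: IE gets the
# SSL-hosted control graphics, everyone else gets the plain ones.
def image_host_key(user_agent)
  user_agent.include?("MSIE") ? "ssl" : "plain"
end

cache = FragmentCache.new
%w[Firefox Safari MSIE Firefox MSIE].each do |agent|
  host = image_host_key(agent)
  cache.fetch(["people/new/contact_info", host]) { "<form data-images='#{host}'>...</form>" }
end
```

Five page views, two renders: the form is built once per image host and then served from the cache. Leaving the differentiation key out would let whichever variant happened to be rendered first be served to every browser.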

Good enough performance

But saving 150ms per call wasn’t going to be enough. So I added memcached caching to the display of the individual contacts and notes as well. The best option would of course be to cache the entire page, but since Highrise is heavy on permissions for who can see what, that would essentially mean per-user caching: not terribly efficient, and hard to keep in sync. So instead we just cache the individual elements and still run the queries to check what you can see.

It’s not the fastest approach in the world, but remember that performance optimization is never about the optimal; it’s about the good enough. Performance is a problem when it’s a problem, but otherwise it’s just not relevant. People are not going to feel the difference between a page rendered in 50ms and one rendered in 100ms, even though one takes twice as long as the other. Especially not when you consider that each Highrise page also loads a bunch of stylesheets, JavaScript files, and images. At that point, the difference just doesn’t matter.
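The per-element caching described above can be sketched in plain Ruby: the permission check runs on every request, while the rendered HTML for each visible note is shared between users. Keying fragments on the record's id and update time is an assumption made for this sketch, since the post doesn’t say how Highrise expires these fragments; `Note`, `ElementCache`, and `render_notes` are hypothetical names.

```ruby
Note = Struct.new(:id, :body, :updated_at, :visible_to)

# Stand-in for memcached. Keys include the note's update time (an assumed
# expiry scheme), so editing a note simply produces a fresh key.
class ElementCache
  attr_reader :renders

  def initialize
    @store = {}
    @renders = 0
  end

  def fetch(key)
    @store.fetch(key) do
      @renders += 1
      @store[key] = yield
    end
  end
end

# Permissions are checked on every call (never cached); only the HTML of
# each visible note comes from the shared cache.
def render_notes(notes, user, cache)
  visible = notes.select { |note| note.visible_to.include?(user) }
  visible.map do |note|
    cache.fetch(["note", note.id, note.updated_at.to_i]) { "<p>#{note.body}</p>" }
  end
end

written = Time.at(0)
notes = [
  Note.new(1, "Called about the renewal", written, %w[alice bob]),
  Note.new(2, "Private pricing notes", written, %w[alice])
]
cache = ElementCache.new
alice_view = render_notes(notes, "alice", cache) # renders both notes
bob_view = render_notes(notes, "bob", cache)     # reuses note 1, never sees note 2
```

The trade-off matches the one described in the post: every request still pays for the permission queries, but no user ever receives a page assembled for someone else.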

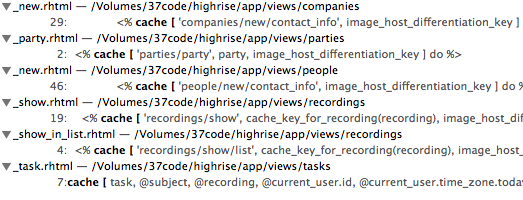

All that was needed in the end to make Highrise considerably faster was these five caching calls we do in the view:

This brought pages that could previously take well over a second down to the 100-400ms range. Much more acceptable. Our general rule of thumb is that most pages should render their HTML on the server in less than 200ms, and almost all in less than 500ms. That feels like a good compromise for good-enough performance. Of course, we have lots of actions rendering in way less than that, and also some that are still above that range.
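That rule of thumb is easy to turn into a check over a batch of measured render times. A sketch in plain Ruby; reading "most" as 75% and "almost all" as 95% is an assumption, and `share_below`/`within_budget?` are hypothetical helpers.

```ruby
# Share of timings (in ms) strictly below `limit`.
def share_below(timings_ms, limit)
  timings_ms.count { |t| t < limit }.fdiv(timings_ms.size)
end

# True when "most" pages render under 200ms and "almost all" under 500ms.
# The 0.75 / 0.95 readings of "most" / "almost all" are assumptions.
def within_budget?(timings_ms, most: 0.75, almost_all: 0.95)
  return true if timings_ms.empty?

  share_below(timings_ms, 200) >= most && share_below(timings_ms, 500) >= almost_all
end
```

For example, `within_budget?([100, 120, 150, 180, 450])` is true (80% under 200ms, all under 500ms), while `within_budget?([100, 300, 350, 400, 600])` is false.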

Accidental gains

As I pushed these improvements live, I was tailing the production logs to get a cursory overview of how much the caching was improving repeated calls. It turned out to be improving them nicely, but I also noticed something else: generating the Atom feeds that kept showing up in the log was taking an awfully long time. Many took 500ms or so. Nasty when you see the same request come in again and again!
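Spotting repeated slow requests by eye works, but the same idea fits in a few lines of Ruby. This sketch groups completed requests by URL and ranks the repeats by average time. The log line shape in the comment is an assumption for illustration, so the regular expression would need adjusting to the real production log format.

```ruby
# Assumed log line shape (illustrative only):
#   Completed in 512ms (View: 480, DB: 12) | 200 OK [http://example.com/feed.atom]
LOG_LINE = /Completed in (\d+)ms.*\[(\S+)\]/

# Groups request times by URL and returns [url, count, average_ms] rows for
# URLs seen at least `min_count` times, slowest first.
def slow_repeats(lines, min_count: 2)
  times = Hash.new { |hash, url| hash[url] = [] }
  lines.each do |line|
    match = LOG_LINE.match(line)
    next unless match

    times[match[2]] << match[1].to_i
  end
  times.select { |_url, ts| ts.size >= min_count }
       .map { |url, ts| [url, ts.size, ts.sum.fdiv(ts.size)] }
       .sort_by { |_url, _count, avg| -avg }
end
```

Fed a tail of the log, the feed URLs would float to the top of the list, which is exactly where conditional GET pays off.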

Thankfully Highrise had just been updated to Rails 2.2 as part of this improvement run anyway, which meant that we had access to the new HTTP freshness features. I quickly added a few ActionController::Base#stale? calls and immediately saw the beauty of “304 Not Modified” responses flying back over the wire, meaning that we were no longer regenerating a response for a client that already had the latest version. HTTP is peachy!
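The check behind those 304s is simple enough to sketch without Rails. This plain-Ruby version compares the client's If-None-Match and If-Modified-Since headers against the resource's current ETag and modification time, and only runs the expensive rendering block when the client's copy is out of date. It illustrates the idea behind stale?; it is not Rails' implementation, and `fresh?`, `not_modified_since?`, and `respond` are hypothetical names.

```ruby
require "time"

# True when the client's cached copy is still current, so we may answer
# "304 Not Modified". Simplified: a single quoted ETag only, and when both
# validators are sent, both must match.
def fresh?(headers, etag:, last_modified:)
  if_none_match = headers["If-None-Match"]
  if_modified_since = headers["If-Modified-Since"]
  return false if if_none_match.nil? && if_modified_since.nil?

  etag_ok = if_none_match.nil? || if_none_match == %("#{etag}")
  time_ok = if_modified_since.nil? || not_modified_since?(last_modified, if_modified_since)
  etag_ok && time_ok
end

# HTTP dates have one-second resolution, so compare whole seconds.
# An unparseable date counts as "modified".
def not_modified_since?(last_modified, http_date)
  last_modified.to_i <= Time.httpdate(http_date).to_i
rescue ArgumentError
  false
end

# Returns [status, body]; the expensive block runs only for stale clients.
def respond(headers, etag:, last_modified:)
  return [304, ""] if fresh?(headers, etag: etag, last_modified: last_modified)

  [200, yield]
end
```

Whatever feeds last_modified should be the record's last update time rather than its creation time; otherwise edits never invalidate the client's copy.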

I also noticed that we were fielding a lot of sorta-expensive API calls from a known third party, and I gently emailed them to ask that they respect the ETag and Last-Modified headers, so they wouldn’t tax our servers when they already had the latest info.
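On the client side, respecting those headers means remembering the ETag and Last-Modified values from the last full response and sending them back as If-None-Match and If-Modified-Since. A sketch built on Ruby's standard Net::HTTP request objects; `ConditionalClient` is a hypothetical class, and sending the request is left to the caller.

```ruby
require "net/http"
require "uri"

# Remembers the validators from the last full response for each URL and
# replays them, so an unchanged resource costs the server only a 304.
class ConditionalClient
  Entry = Struct.new(:etag, :last_modified, :body)

  def initialize
    @entries = {}
  end

  # Builds a GET request that carries If-None-Match / If-Modified-Since
  # when we already hold a copy of this URL. Nothing is sent here.
  def build_request(url)
    request = Net::HTTP::Get.new(URI(url))
    entry = @entries[url]
    if entry
      request["If-None-Match"] = entry.etag if entry.etag
      request["If-Modified-Since"] = entry.last_modified if entry.last_modified
    end
    request
  end

  # Returns the body to use: the cached copy on a 304 (which assumes we
  # sent validators for this URL), otherwise the new body, whose
  # validators are stored for next time.
  def handle_response(url, status, headers, body)
    return @entries.fetch(url).body if status == 304

    @entries[url] = Entry.new(headers["ETag"], headers["Last-Modified"], body)
    body
  end
end
```

With a live connection, `response = Net::HTTP.start(uri.host, uri.port) { |http| http.request(client.build_request(url)) }` followed by `client.handle_response(url, response.code.to_i, response, response.body)` would complete the loop, since Net::HTTP responses support case-insensitive header lookup with `[]`.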

Together, all of these changes led to a ~30% drop in average response times as measured by New Relic. Not too shabby for a handful of caching calls.

Justin Reese

on 06 Jan 09

David, thanks for this article. Just the sort of informative technical transparency I was hoping SvN would add/increase. Very cool.

John Topley

on 06 Jan 09

Very nice. Thanks for the details.

Brennan Dunn

on 06 Jan 09

Please keep these types of posts coming.

Tim

on 06 Jan 09

This is my favorite type of SvN post.

Michael

on 06 Jan 09

Good combination of computer and social engineering, there. I remember giving up on Highrise about a year ago when it wasn’t loading quickly – these days it’s lightning fast.

DHH

on 06 Jan 09

Thanks, Michael. The biggest improvement for Highrise came when we started to pay strong attention to the perceived end-user performance as advocated by YSlow. That should be the first stop on any performance improvement tour. Once you’re getting all A’s on their report, you can move on to this kind of nitty-gritty stuff.

Mike Burke

on 06 Jan 09

Echoing previous sentiments, these are the best type of posts on SvN. Keep it up!

Nick

on 06 Jan 09

Once you think about it, though, you realize there’s nothing wrong with those 500ms as long as they are stable and predictable.

But most companies out there would be very shy and secretive about this kind of information: “What? 500ms? Blame that on your browser, your ISP, the Internet itself, because our responses are blazing fast; we generate and send pages before receiving requests most of the time.”

I just love this kind of post! They are always to the point and answer the most burning question that arises while reading SvN and Getting Real: that philosophy sounds exciting, but WHAT EXACTLY are those guys doing when a real-world problem arises?

Tobin Harris

on 06 Jan 09

I love these kinds of posts too. There’s something incredibly invaluable and satisfying about skilled people (i.e. David) giving totally hands-on, tangible advice about how they tackle tough, yet common problems.

Question… Overall, how many man hours do you think it took for you to diagnose, plan and implement those improvements you mentioned?

I only ask because I’ve been budgeted 1/2 a day on such problems and got NOWHERE!

Paul

on 06 Jan 09

Good post! Thanks.

Recently I saw this screencast. It’s about designing fast websites, but it also presents some data on performance in relation to user actions. In her talk she mentioned a 1% drop in sales for Amazon after adding 100ms to the response times.

http://yuiblog.com/blog/2008/12/23/video-sullivan/

Swami Atma

on 06 Jan 09

Now that’s a great post. Please keep technical posts coming. Thanks.

Matt B

on 06 Jan 09

Thanks for mentioning YSlow – looks like a great tool.

Please keep these types of posts coming to SvN!

rohandey

on 07 Jan 09

Thanks, it is definitely a good starter lesson in performance improvement for me.

Eduardo Sasso

on 07 Jan 09

Nice post. It’s always nice to read about the strategies used to improve performance and stuff like that. I would like to see more posts about it.

Nathan de Vries

on 08 Jan 09

Hi David,

It appears as though you’ve overloaded ActionView::Helpers::CacheHelper#cache with your own implementation. Are there any gotchas to using ActionController::Caching::Fragments & configuring ActionController::Base.cache_store to use :mem_cache_store? Or alternatively, using Chris Wanstrath’s cache_fu?

Ben

on 08 Jan 09

Just a quick nit: 50ms to 100ms would count as 100% worse, but 100ms to 50ms is “only” a 50% improvement. You’d have to achieve 0ms to improve by 100%. :) (Your point is still absolutely valid of course. And to reiterate the comments of others, these kinds of posts are great, thanks!)

Morten

on 08 Jan 09

@nathan – the cache [ array, here ] do syntax is Rails 2.2

Tim Q

on 11 Jan 09

What are you using to measure how long it takes to load? Just a stopwatch, or is there some automated utility that I’m missing out on here?

Mike Larkin

on 12 Jan 09

Great post!

Not sure who maintains the API documentation, but the example for stale? (http://api.rubyonrails.org/classes/ActionController/Base.html#M000518):

    if stale?(:etag => @article, :last_modified => @article.created_at.utc)

should actually be

    if stale?(:etag => @article, :last_modified => @article.updated_at.utc)

because created_at will always return the same value, and we’re interested in when the object was last modified.
