Last week I wrote about Audi’s customer satisfaction survey. The numbers and words just didn’t mesh. And there were dozens of questions – many of which were difficult to rate according to their given scale. I didn’t end up filling it out and deleted it from my inbox.

This week I got another survey from another company. This one was from Zingerman’s – the famous Ann Arbor-based deli. I’d recently purchased some olive oil, vinegar, and mustard from their site.

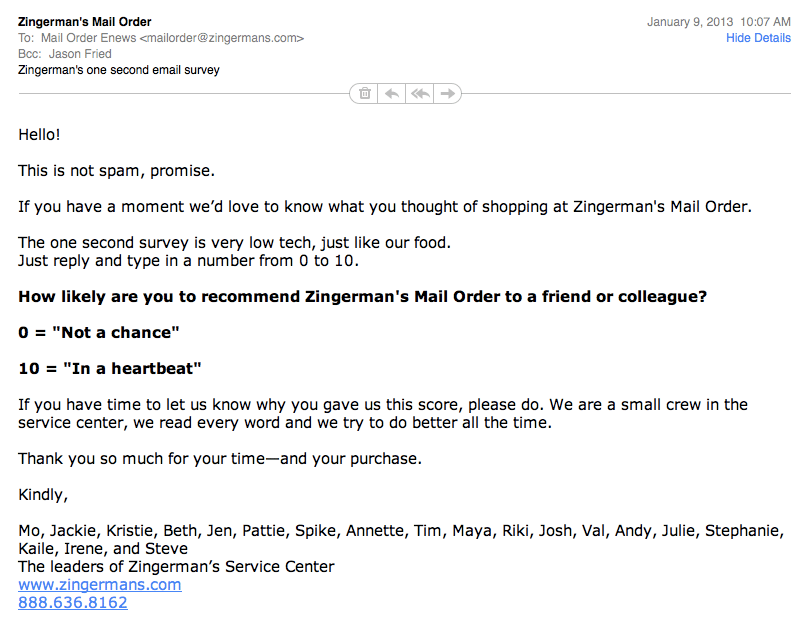

Here’s the email they sent:

That’s a fantastic email. Short, friendly, clearly written by someone who understands tone, brand, and how to get feedback that’s useful. No tricks. Yes, it’s automated, and signed by a team, but that’s fine. It was originally written by someone who cares. It’s consistent with Zingerman’s casual catalog voice, too.

They have a 0-10 scale just like Audi. Except they only have one question. “How likely are you to recommend Zingermans?” That question sums up just about everything. They consider 0 “not a chance” and 10 “in a heartbeat”. The rest is up to you.

And they don’t ask you to click over to a web-based survey somewhere. They just say, hey, reply to this email with a number and, if you have time, let us know why you gave us this rating. Your reply is your answer, that’s it. There’s nothing else to do and nowhere else to go. Easy.

Then they say: “We are a small crew in the service center, we read every word and we try to do better all the time.” That alone makes me want to give them feedback. I know I’ll be heard. I believe I’ll be heard. The Audi survey? It feels like it’s going straight into a database. I’m an aggregate stat, not a person, not a customer.

It would be easy to say that Audi’s survey will give Audi more detailed feedback. More data points attached to specific experiences. And it would be easy to say that Zingerman’s question is too broad, too difficult to act on a “7” with no other information.

But I’d wager that Zingerman’s gets more useful feedback than Audi gets. That one question – answered simply with a reply to an email – probably leads to more valuable, subtle feedback than the dozen-question, extremely detailed, slippery Audi survey.

The Zingerman’s survey feels like it’s written by someone who’s curious about the answer. The Audi survey feels like it’s written by someone who’s collecting statistics. Which company do you think really cares more?

MR

on 10 Jan 13

It’s a fun, personal move that they signed it with everyone’s name from the Zingerman’s team, and not just a generic “The Zingerman’s Team.”

Andy T

on 10 Jan 13

Zingerman’s did well by sending you, particularly, that poll. Also, they have “Gifts of Meat”, and how can you go wrong with that? Nothing says “I love you” like a rasher of bacon.

Gurnick

on 10 Jan 13

It is so simple that I would want to fill it out and share additional information and would be interested in how others responded.

Simple always wins!

Scott

on 10 Jan 13

Check out a book called “The Ultimate Question”. In addition to simplicity, Zingerman’s is being more efficient and (if you buy into the premise presented in the book) effective.

Christiano Kwena

on 10 Jan 13

I like the approach by Zingerman’s, to offer only one mandatory step, only one. If someone gives me just a choice and a maybe that adds to that choice, I will of course take up the choice because I have nothing else to wander on.

Erica Duncan

on 10 Jan 13

That’s great. I received a similar e-mail that struck a positive chord with me. I signed up to a mailing list for an inbound marketing company, Optify. A few days after registering and receiving some content, I received an e-mail from one of the associates asking me to put myself in a bucket, someone that (1) doesn’t want Optify’s help, (2) is interested in learning about what Optify has to offer, or (3) could definitely use Optify’s services. I put myself in the 1st category and the same associate thanked me for my time and said I should feel free to reach out if I need more info. I appreciated the thoughtful way in which Optify followed up with me.

TC

on 10 Jan 13

They are likely just trying to calculate the well-known “Net Promoter Score”, which is people that recommend minus people that wouldn’t (which means you can have a negative score). Best practice is to follow up with a request for more detailed information.

Companies like NPS because it’s easy to calculate and compare across companies.

Curious

on 10 Jan 13

What web mail client is it in the screenshot?

Brett Hardin

on 10 Jan 13

The results of this survey are being used for netpro scores. Many companies put a lot of weight into this score as being the main KPI of their company.

@Curious

on 10 Jan 13

That would be the standard Mail application that comes with OS X Mountain Lion.

Dave M

on 10 Jan 13

The glaring problem with this question (which appears on most surveys) is that I am not likely at all to recommend anything to my friends, so even if I had a great experience, I would still give it a zero. I don’t care where you shop.

Anonymous Coward

on 10 Jan 13

The fallacy in all this is thinking that either of them really cares about the survey results or statistics at all. It’s all just to get the brand out in front of you again.

I was once paid to compile survey results collected by an ad agency for their client. They wanted totals, and they wanted all the hand-written notes at the end of the surveys compiled into one long document. Guess what they “used” my work for? To try and convince the client that they needed more marketing, that the results were not succinct and predictable, that their customers were all over the place about everything on the super long 50-question survey.

In the end, surveys like this are things not to be “reworked” but to literally be ABANDONED as a complete waste of time and the continuing non-creative brainchild of an ad/marketing mind still caught up in yesterday.

criteria

on 10 Jan 13

I would be annoyed to receive this silly email from Zingermans. And according to a recent movie about Ann Arbor, it seems like people get “busy” in the fixings at night :)

Mo

on 10 Jan 13

I don’t know if it’s bad internet etiquette to jump in as the guy who wrote the survey email and uses it in the business but I thought why not.

We do use the number answer to calculate net promoter. That number isn’t terribly helpful, but Jason is right, the comments we get (about half the responders say something) are often really useful. They’ve definitely helped us decide what parts of our website and business to change and not change (some things we think are problems no one ever mentions). We don’t have an ad agency or anything. The email replies go right back into our office. We do everything manually and share any comments in real time as they come in with some of our crew and then compile them so we can see larger trends over time.

Panagiotis Panagi

on 10 Jan 13

10. It’s posts like this that keep SvN in my Google Reader.

Josh

on 10 Jan 13

Jason, I’m curious how this could affect engagement metrics and am surprised it wasn’t brought up in the article. I know if you use Gmail and reply to an email, the address you replied to automatically gets added to your address book. If you have a sender’s address in your contact list, it pretty much ensures future messages will get delivered to your inbox. I feel as though this survey idea is good for both collecting feedback and getting recipients to engage in your email. Is this a valid point?

Jason Fried

on 11 Jan 13

Mo – it’s great that you jumped in. Thanks for sharing.

Chandrashekhar

on 11 Jan 13

Hello,

This is not a spam comment, promise.

I had a moment (or lots of them) to read this whole thing and then the comments and then thought, hey, this is awesome! Well actually, the “this is awesome” thing hit me right after the first few lines following the email screenshot.

I did doubt if a question like this, with just a binary answer, would be really helpful but boy oh boy, this is amazing! People with a fetish for those strong statistics and analytics would call this silly but Mo and the team have figured out the basic essence of selling: it’s about people and people buying and the buying experience…

By giving people a choice-less choice, you’re letting them say their words, which is a far more important metric to improve the overall UX. Awesome, guys.

And I can’t say how much the crowd is indebted to Jason’s posts these days. Thanks bows

Vesa M

on 11 Jan 13

Here, Audi did that just-one-number thing. And it was a text message instead of email (harder to ignore, more guaranteed response, more personal – well, in Finnish culture at least).

FrankK

on 14 Jan 13

A lot has already been said in previous posts, therefore I will try not to be redundant, but having worked at GE for 10+ years I am pretty familiar with NPS/Ultimate Question. I actually ran the process for a division of ours for a few years. There is certainly value in collecting this “score”, however the real value comes when you start using it in conjunction with a competitor’s score and looking at trends.

First, let me quickly explain how the score works. There are 3 classifications based on a customer response: detractor, passive and promoter. If someone scores you as a 0-6, then they are considered a detractor. If a 7-8, then passive, meanwhile a 9-10 would make them a promoter. However, you must then take it one step further…take the total number of detractors (example: 50) and subtract it from your promoters (example: 100) and you get a score of 50. Note, the passives are left out of the equation. Thus, your score for that survey period is 50. BTW, you can definitely have negative scores (e.g. -50).

Now, when doing a survey with competitors in mind, you use the same methodology, however you add a second question in: “how likely would you be to recommend XYZ company…” I’ve over-simplified this a bit, however I hope everyone gets the point.

This little number tells you a lot:

1. What’s your score this year…
2. What’s your score in comparison to other years…trends…
3. What’s your score vs. competition…trends
4. How does score break down by customer segments, etc., etc., etc.

Cheers, Frank

FrankK

on 14 Jan 13

Slight correction: take the PERCENT who are detractors (of total), e.g. 25% and subtract from PERCENT who are promoters (of total), e.g. 30% and you arrive at a score of 5. NOT the raw numbers….

Sorry…something didn’t seem right when I first wrote…should not have been in such a hurry. All other points remain valid….

Sorry!
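
For anyone who wants to check the arithmetic, the corrected rule can be sketched in a few lines of Python. This is only an illustration: the function name `net_promoter_score` and the sample ratings are made up, while the 0-6 / 7-8 / 9-10 buckets and the percent-of-promoters minus percent-of-detractors rule come from the description in this thread.

```python
from typing import Iterable


def net_promoter_score(ratings: Iterable[int]) -> float:
    """Percent of promoters (9-10) minus percent of detractors (0-6).

    Passives (7-8) count toward the total but toward neither group.
    """
    ratings = list(ratings)
    if not ratings:
        raise ValueError("need at least one rating")
    if any(r < 0 or r > 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")

    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)


# Hypothetical batch of 100 replies: 30 promoters, 45 passives, 25 detractors,
# chosen to match the 30% / 25% example in the correction.
sample = [10] * 30 + [8] * 45 + [3] * 25
print(net_promoter_score(sample))  # 5.0
```

Because the result is a difference of percentages, it ranges from -100 to 100, which is why a negative score is possible.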

This discussion is closed.