Boney Money Brown.

You’re reading Signal v. Noise, a publication about the web by Basecamp since 1999.


It used to be one of the biggest pains of web development. Juggling different browser versions and wasting endless hours coming up with workarounds and hacks. Thankfully, those troubles are now largely optional for many developers of the web.

Chrome ushered in a new era of the always updating browser and it’s been a monumental success. For Basecamp, just over 40% of our users are on Chrome and 97% of them are spread across three very recent versions: 16.0.912.75, 16.0.912.63, and 16.0.912.77. I doubt that many Chrome users even know what version they’re on — they just know that they’re always up to date.

Firefox has followed Chrome into the auto-updating world and only a small slice of users are still sitting on old versions. For Basecamp, a third of our users are on Firefox: 55% on version 9, 25% on version 8. The key trouble area is the 5% still sitting on version 3.6. But if you take 5% of a third, just over 1% of our users are on Firefox 3.6.

Safari is the third biggest browser for Basecamp with a 13% slice and nearly all of them are on some version of 534.x or 533.x. So that’s a pretty easy baseline as well.

Finally we have Internet Explorer: The favorite punching bag of web developers everywhere and for a very good reason. IE represents just 11% of our users on Basecamp, but the split across versions is large and depressing. 9% of our IE users are running IE7 — a browser that’s more than five years old! 54% are running IE8, which is about three years old. But at least 36% are running a modern browser in IE9.

7% of Basecamp users on undesirables

In summary, we have ~1% of users on an undesirable version of Firefox and about 6% on an undesirable version of IE. So that’s a total of 7% of current Basecamp users on undesirable browser versions that take considerable additional effort to support (effort that then does not go into feature development or other productive areas).

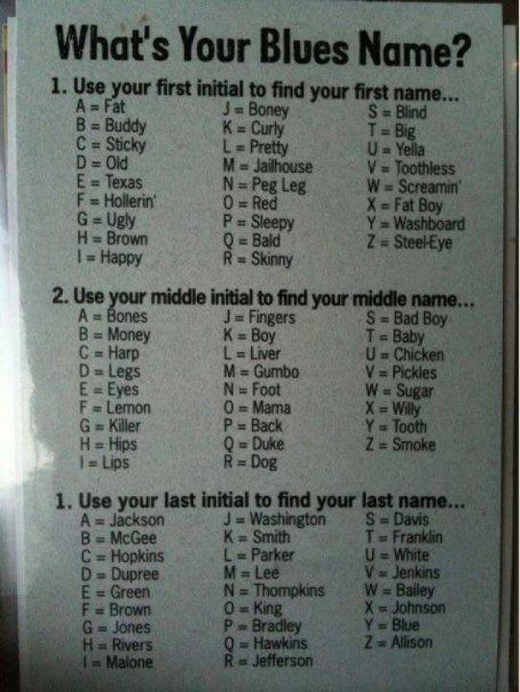

So we’ve decided to raise the browser bar for Basecamp Next and focus only on supporting Chrome 7+, Firefox 4+, Safari 4+, and, most crucially, Internet Explorer 9+. Meaning that the 7% of current Basecamp users who are still on a really old browser will have to upgrade in order to use Basecamp Next.
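As a rough illustration of that cutoff, here is a minimal sketch of a version gate. The minimum versions come from the paragraph above; the function name, the lowercase browser keys, and the choice to reject unknown browsers are assumptions made for the example, and parsing a real user agent string is left out.

```python
# Minimum supported major versions, taken from the post.
MINIMUM_VERSIONS = {
    "chrome": 7,
    "firefox": 4,
    "safari": 4,
    "ie": 9,
}


def is_supported(browser: str, version: str) -> bool:
    """Return True if the browser meets the minimum major version.

    `browser` is a family name such as "chrome" and `version` a dotted
    string such as "16.0.912.75". Unknown browsers and unparseable
    versions are treated as unsupported, which is an assumption of
    this sketch.
    """
    minimum = MINIMUM_VERSIONS.get(browser.lower())
    if minimum is None:
        return False
    try:
        major = int(version.split(".")[0])
    except ValueError:
        return False
    return major >= minimum


# Example checks using versions mentioned in the post.
chrome_ok = is_supported("chrome", "16.0.912.75")
firefox_36_ok = is_supported("firefox", "3.6")
ie8_ok = is_supported("ie", "8.0")
ie9_ok = is_supported("ie", "9.0")
```

A check like this would only decide whether to show the upgrade notice; it says nothing about which features actually work in a given browser.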

This is similar to what we did in 2005, when we phased out support for IE5 while it still had a 7% slice of our users. Or as in 2008, when we killed support for IE6 while that browser was enjoying closer to 8% of our users.

We know it’s not always easy to upgrade your browser (or force an upgrade on a client), but we believe it’s necessary to offer the best Basecamp we can possibly make. In addition, we’re not going to move the requirements on Basecamp Classic, so that’ll continue to work for people who are unable to use a modern browser.

Basecamp Next, however, will greet users of old browsers with this:

We send a lot of mail for Basecamp, Highrise, Backpack, and Campfire (and some for Sortfolio, the Jobs Board, Writeboard, and Tadalist). One of the most frequently asked questions we get is about how we handle mail delivery and ensure that emails are making it to people’s inboxes.

First, some numbers to give a little context to what we mean by “a lot” of email. In the last 7 days, we’ve sent just shy of 16 million emails, with approximately 99.3% of them being accepted by the remote mail server.

Email delivery rate is a little bit of a tough thing to benchmark, but by most accounts we’re doing pretty well at those rates (for comparison, the tiny fraction of email that we use a third party for has had between a 96.9% and 98.6% delivery rate for our most recent mailings).

We send almost all of our outgoing email from our own servers in our data center located just outside of Chicago. We use Campaign Monitor for our mailing lists, but all of the email that’s generated by our applications is sent from our own servers.

We have three mail-relay servers running Postfix that take mail from our application and jobs servers and queue it for delivery to tens of thousands of remote mail servers, sending from about 15 unique IP addresses.

We have developed some instrumentation so we can monitor how we are doing on getting messages to our users’ inbox. Our applications tag each outgoing message with a unique header with a hashed value that gets recorded by the application before the message is sent.
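The tagging step could look something like the following minimal sketch. The header name `X-Delivery-Token`, the use of SHA-256 over a random UUID, and the in-memory `store` dictionary are all assumptions for illustration; the post does not say which header, hash, or storage the applications use.

```python
import hashlib
import uuid
from email.message import EmailMessage

# Hypothetical header name; the post does not name the real one.
TRACKING_HEADER = "X-Delivery-Token"


def tag_message(message: EmailMessage, recorded: dict) -> str:
    """Attach a unique hashed token to the message and record it."""
    token = hashlib.sha256(uuid.uuid4().bytes).hexdigest()
    # Record the token before the message is sent, so that delivery
    # information found later can be linked back to this message.
    recorded[token] = {"to": str(message["To"]), "subject": str(message["Subject"])}
    message[TRACKING_HEADER] = token
    return token


# Usage: build a message, tag it, then hand it to the mail relay.
store = {}
msg = EmailMessage()
msg["To"] = "user@example.com"
msg["Subject"] = "New comment on your project"
msg.set_content("Someone commented on your project.")
token = tag_message(msg, store)
```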

To gather delivery information, we run a script that tails the Postfix logs and extracts the delivery time and status for each piece of mail, including any error message received from the receiving mail server, and links it back to the hash the application stored. We store this information for 30 days so that our fantastic support team is able to help customers track down why they may not have received an email.

We also send these statistics to our statsd server so they can be reported through our metrics dashboard. This “live” and historical information can then be used by our operations team to check how we’re doing on aggregate mail delivery for each application.
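To make the log-to-metrics step concrete, here is a minimal sketch that parses one Postfix SMTP delivery line and turns it into statsd-style metric lines. The sample log line, the metric names, and the function names are assumptions for illustration, and the regular expression only handles the common short hexadecimal queue IDs. How a queue ID gets linked back to the application's hash is not described in the post, so that part is left out.

```python
import re
from typing import Optional

# Matches the delivery result lines written by Postfix's smtp client.
DELIVERY_RE = re.compile(
    r"postfix/smtp\[\d+\]: (?P<queue_id>[0-9A-F]+): "
    r"to=<(?P<recipient>[^>]*)>, .*?"
    r"delay=(?P<delay>[\d.]+), .*?"
    r"status=(?P<status>\w+) \((?P<response>.*)\)$"
)

# Made-up example line in the usual Postfix log format.
SAMPLE = (
    "Jan 31 12:00:01 relay1 postfix/smtp[1234]: 3F2A81C0042: "
    "to=<user@example.com>, relay=mx.example.com[192.0.2.10]:25, "
    "delay=0.52, delays=0.1/0/0.2/0.22, dsn=2.0.0, "
    "status=sent (250 2.0.0 OK)"
)


def parse_delivery(line: str) -> Optional[dict]:
    """Extract queue ID, recipient, delay, status, and server response."""
    match = DELIVERY_RE.search(line)
    if match is None:
        return None
    record = match.groupdict()
    record["delay"] = float(record["delay"])  # seconds
    return record


def statsd_lines(app: str, record: dict) -> list:
    """Format a counter and a timer in the statsd text protocol."""
    return [
        f"mail.{app}.{record['status']}:1|c",
        f"mail.{app}.delay:{round(record['delay'] * 1000)}|ms",
    ]


record = parse_delivery(SAMPLE)
metrics = statsd_lines("basecamp", record)
```

In a real setup each of these metric lines would be sent to the statsd server as a UDP datagram; the sketch stops at formatting so it can run without a server.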

Over the last few years, at least a dozen services that specialize in sending email have popped up, ranging from the bare-bones to the full-service. Despite all these “email as a service” startups we’ve kept our mail delivery in-house, for a couple of reasons:

Given all this, why should we pay someone tens of thousands of dollars to do it? We shouldn’t, and we don’t.

Read more about how we keep delivery rates high after the jump…

As we announced at the beginning of the month, we’re always on a mission to improve our uptime. Inaccessible apps cause a lot of frustration, and users don’t care whether the downtime was scheduled or not.

While publishing our own uptimes has been a great step towards getting everyone in the company focused on improving, we also wanted to compare ourselves to others in the industry. So since December 16, we’ve been tracking five other applications through Pingdom to compare and contrast.

The goal is to have the least amount of downtime and here are the results from the period December 16 to January 31:

Congratulations to GitHub for the number one spot on the list. We are definitely going to be gunning for them! We’ll publish another edition of this list in a month or so.

Today Matt Kent started at 37signals as the sixth member of our operations team. Previously Matt worked for 10 years(!) at Bravenet as a System Administrator handling application deployment and scaling tasks. Some of Matt’s managers and coworkers used phrases like “backbone of our team” and “a great person, and a fantastic engineer” to describe what working with Matt is like.

Recently Matt was selected as an Opscode MVP for his contributions to Chef not once, not twice, but three times. Less than a day after Matt’s first interview, he resolved both of our Chef bug reports, endearing him to the entire team. In addition, Matt has experience with multiple data center setups, automated deployment, monitoring, and just about every other area we touch.

Welcome Matt!

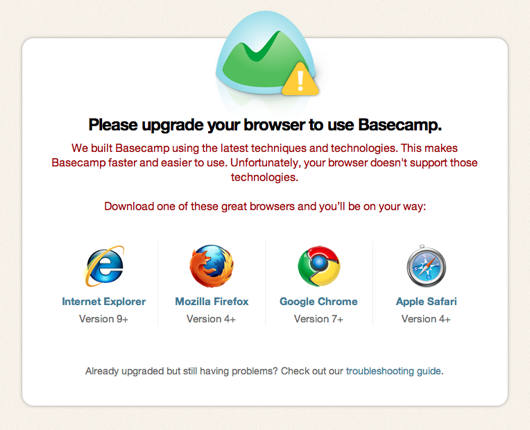

When I did all the programming for the original version of Basecamp back in 2003, we ended up shipping with just about 2,000 lines of code. A lot has happened in those eight years and we’ve acquired a more delicate taste for just how beautifully we want the basics executed.

This means significantly more code. Here are the stats from running “rake stats” on the Rails project:

On top of that we have just over 5,000 lines of CoffeeScript — almost as much as Ruby! This CoffeeScript compiles to about twice the lines of JavaScript.

Basecamp Next is running Rails 3.2-stable and we’ve got a good splash of client-side MVC in the few areas where that makes sense through Backbone.js and various tailor-made setups.

Got any questions about our stack or code base? Post them in the comments and we’ll fill you in.

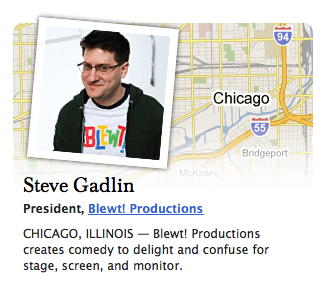

Steve Gadlin, Basecamp customer, received $25,000 from Mark Cuban on the television show Shark Tank last Friday with his unique business idea: $10 cat drawings. He wants to draw a cat for you. What are you waiting for?

From the very start, we wanted Basecamp Next to be fast. Really, really fast. To do so we built a Russian-doll architecture of nested caching that I’ll write up in detail soon. But for now I just wanted to share where all this caching is going to live as we just installed it at the hosting center.

It kinda reminds me of what pictures of a drug raid look like when they lay out all the coke and cash on the table, but this is what 864GB of RAM looks like:

Cost of the loot was $12,000.
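Until that write-up arrives, here is a minimal sketch of the general idea usually meant by nested, Russian-doll caching: every fragment is cached under a key that includes a version, and changing an inner record bumps the version of everything that contains it. This is an illustration under stated assumptions, not the Basecamp Next implementation; the `Record` class, the integer version counter, and the in-memory `cache` dictionary are all hypothetical.

```python
import itertools

_clock = itertools.count(1)  # stand-in for updated-at timestamps
cache = {}                   # stand-in for the real cache store
render_calls = []            # records which fragments were actually rendered


class Record:
    def __init__(self, kind, ident, name, parent=None):
        self.kind, self.ident, self.name = kind, ident, name
        self.parent = parent
        self.children = []
        self.version = next(_clock)
        if parent is not None:
            parent.children.append(self)

    def update(self, name):
        self.name = name
        self.touch()

    def touch(self):
        """Bump this record's version and that of every ancestor."""
        self.version = next(_clock)
        if self.parent is not None:
            self.parent.touch()

    @property
    def cache_key(self):
        return f"{self.kind}/{self.ident}-{self.version}"


def render(record):
    """Render a fragment, reusing cached inner fragments where possible."""
    key = record.cache_key
    if key in cache:
        return cache[key]
    render_calls.append(record.name)
    inner = "".join(render(child) for child in record.children)
    html = f"<div>{record.name}{inner}</div>"
    cache[key] = html
    return html


project = Record("project", 1, "Project")
first = Record("todo", 1, "Buy milk", parent=project)
second = Record("todo", 2, "Ship it", parent=project)

render(project)              # cold cache: all three fragments are rendered
first.update("Buy oat milk")
page = render(project)       # only the project and the changed todo re-render
```

After the update, the unchanged to-do is served straight from the cache while the outer fragment is rebuilt around it, which is what makes the nesting pay off.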

Three years ago, I wrote about how improvements in technology keep allowing us to punt on sharding the Basecamp database. This is still true, only more so now.

We’ve grown enormously over the last three years but RAM keeps getting cheaper and FusionIO SSDs keep getting faster. If anything, it seems like recent advances in SSD technology are accelerating and it’s ever more unlikely that we’ll need to shard Basecamp.

Basecamp remains a perfect candidate for sharding. Isolated accounts, no sharing between them. Yet the cost in increased complexity is constant while the cost of throwing hardware at the problem keeps dropping.

It’s like how some old people have a hard time dealing with inflation and “they want how much for a gallon of milk these days?”. Technologists who grew up when RAM cost $1,000 per megabyte can have a hard time dealing with the luxury of RAM being virtually free (we just bought about a terabyte worth of RAM for a Basecamp Next caching system that cost just around $12,000).

The progress of technology is throwing an ever greater number of optimizations into the “premature evil” bucket never to be seen again.
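To put the RAM comparison above into numbers, here is a small worked calculation. The 864GB, $12,000, and $1,000 per megabyte figures come from these posts; treating one gigabyte as 1,024 megabytes is an assumption of the sketch.

```python
ram_gb = 864              # size of the caching purchase, from the post
cost_usd = 12_000         # what it cost, from the post
old_price_per_mb = 1_000  # the "RAM cost $1,000 per megabyte" era

# Price per gigabyte today.
price_per_gb = cost_usd / ram_gb

# What the same amount of RAM would have cost at the old price,
# assuming 1 GB = 1,024 MB.
old_cost = ram_gb * 1024 * old_price_per_mb

# How many times cheaper the purchase is now.
ratio = old_cost / cost_usd

print(f"${price_per_gb:.2f} per GB today")
print(f"${old_cost:,} at $1,000 per MB")
print(f"{ratio:,.0f}x cheaper")
```

Under those assumptions the purchase works out to roughly $14 per gigabyte, against close to $885 million at the old price.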

We just got our royalty statement from Crown and are pretty excited about the fact that we’ve sold over 200,000 copies of REWORK now. About three quarters of the sales have been hardcover books with audio and ebook splitting the remainder.

Thanks to everyone who helped us get here by buying and recommending the book. We are very grateful for your support in getting the word out.