When developers first discover the wonders of test-driven development, it’s like gaining entrance to a new and better world with less stress and insecurity. It truly is a wonderful experience well worth celebrating. But internalizing the benefits of testing is only the first step to enlightenment. Knowing what not to test is the harder part of the lesson.

While as a beginner you shouldn’t worry much about what not to test on day one, you better start picking it up by day two. Humans are creatures of habit, and if you start forming bad habits of over-testing early on, it will be hard to shake later. And shake them you must.

“But what’s the harm in over-testing, Phil, don’t you want your code to be safe? If we catch just one bug from entering production, isn’t it worth it?”. Fuck no it ain’t, and don’t call me Phil. This line of argument is how we got the TSA, and how they squandered billions fondling balls and confiscating nail clippers.

Every line of code you write has a cost. It takes time to write it, it takes time to update it, and it takes time to read and understand it. Thus it follows that the benefit derived must be greater than the cost to make it. In the case of over-testing, that’s by definition not the case.

Think of it like this: What’s the cost to prevent a bug? If it takes you 1,000 lines of validation testing to catch the one time Bob accidentally removed the validates_presence_of :name declaration, was it worth it? Of course not (yes, yes, if you were working on an airport control system for launching rockets to Mars and the rockets would hit the White House if they weren’t scheduled with a name, you can test it—but you aren’t, so forget it).

The problem with calling out over-testing is that it’s hard to boil down to a catchy phrase. There’s nothing succinct like test-first, red-green, or other sexy terms that helped propel test-driven development to its rightful place on the center stage. Testing just what’s useful takes nuance, experience, and dozens of fine-grained heuristics.

Seven don’ts of testing

But while all that nuance might have a place in a two-hour dinner conversation with enlightened participants, not so much in a blog post. So let me firebomb the debate with the following list of nuance-less opinions about testing your typical Rails application:

- Don’t aim for 100% coverage.

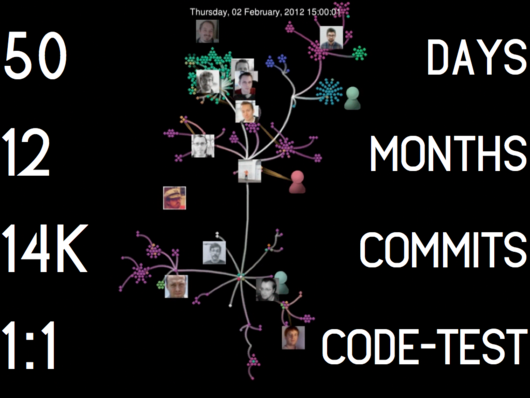

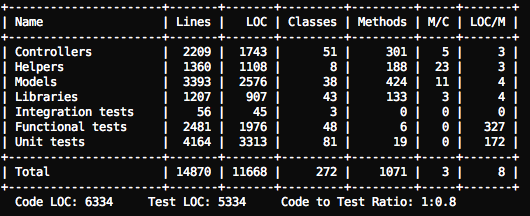

- Code-to-test ratios above 1:2 is a smell, above 1:3 is a stink.

- You’re probably doing it wrong if testing is taking more than 1/3 of your time. You’re definitely doing it wrong if it’s taking up more than half.

- Don’t test standard Active Record associations, validations, or scopes.

- Reserve integration testing for issues arising from the integration of separate elements (aka don’t integration test things that can be unit tested instead).

- Don’t use Cucumber unless you live in the magic kingdom of non-programmers-writing-tests (and send me a bottle of fairy dust if you’re there!)

- Don’t force yourself to test-first every controller, model, and view (my ratio is typically 20% test-first, 80% test-after).

Given all the hundreds of books we’ve seen on how to get started on test-driven development, I wish there’d be just one or two that’d focus on how to tame the beast. There’s a lot of subtlety in figuring out what’s worth testing that’s lost when everyone is focusing on the same bowling or bacon examples of how to test.

But first things first. We must collectively decide that the TSA-style of testing, the coverage theater of quality, is discredited before we can move forward. Very few applications operate at a level of criticality that warrant testing everything.

In the wise words of Kent Beck, the man who deserves the most credit for popularizing test-driven development:

I get paid for code that works, not for tests, so my philosophy is to test as little as possible to reach a given level of confidence (I suspect this level of confidence is high compared to industry standards, but that could just be hubris). If I don’t typically make a kind of mistake (like setting the wrong variables in a constructor), I don’t test for it.